visionOS and XR events

In the past year I’ve had the opportunity to participate in two visionOS hackathons and two XR conferences – indications that mixed reality is still going strong in 2024.

VisionDevCamp happened at the end of March in Santa Clara, CA and was my favorite for a variety of reasons, including the chance to reconnect with my friend Raven Zachery, who ran the event with Dom Sagolla. I also got to meet a ton of Unity and iOS developers from around the country who are now the foundation of the visionOS community.

Coming out of that, Raven was asked to organize a workshop around visionOS for AWE in mid-June in Long Beach, CA. I had lots of plans about various rabbit holes I could go down about visionOS development but Raven encouraged me to keep things high level and strategic and I’m glad he did.

In September I had my second hackathon of the year at Vision Hack, an online event around visionOS organized by Matt Hoerl, Cosmo Scharf and Brian Boyd, Jr. I basically spent three days online mentoring and helping anyone I could. That was a blast and I really loved all the skills and enthusiasm everyone brought to the event.

Then this past week I participated in the Augmented Enterprise Summit in Dallas, TX. I was on a panel there with Mitch Harvey, Andy Hung, Lorna Jean Marcuzzo and Mark Sage.

In that time, I also did a short course for XR Bootcamp to help Unity developers become familiar with Xcode and visionOS and participated in putting together a couple of proposals for the Meta Quest Lifestyle accelerator program.

It’s been a busier year than I really expected. I’m very happy to be closing in on a decade of AR headset development.

Vision Pro Windows, Volumes and Spaces reconsidered

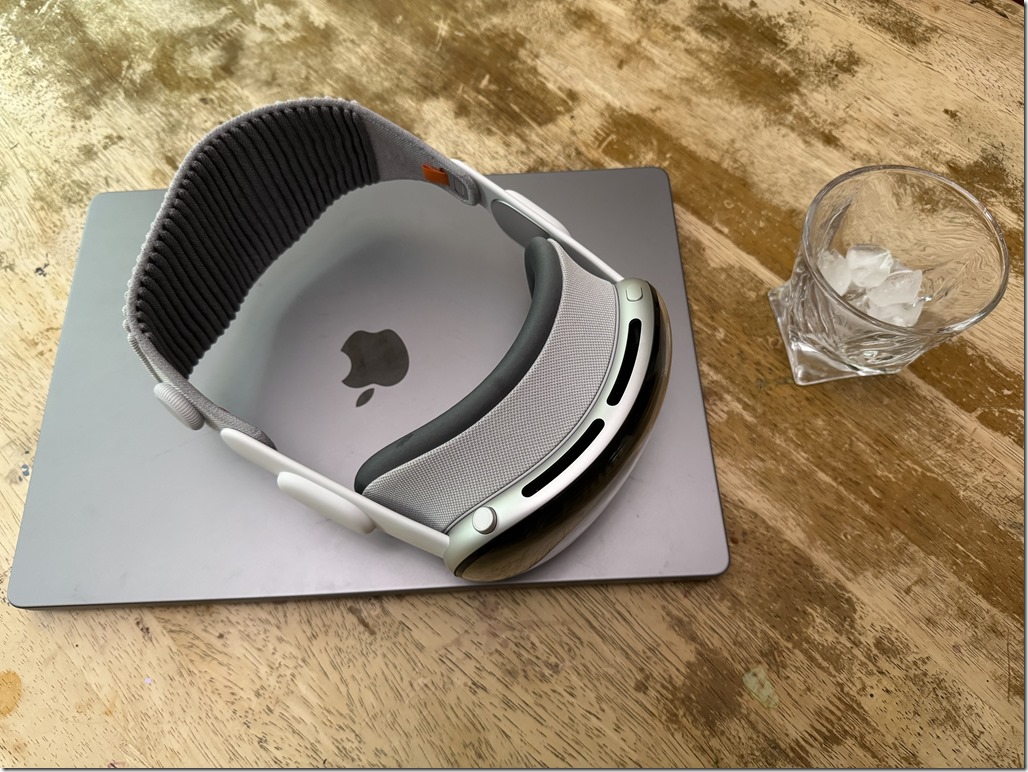

Seeing double (resolution) with the Apple Vision Pro

This is just to provide a small correction to people who say that the Apple Vision Pro has an 8K display. It doesn’t really. It has approximately a 6K total display.

The error comes because Apple very carefully says it has a 4K resolution per eye: “And with more pixels than a 4K TV for each eye, you can enjoy stunning content wherever you are — on a long flight or the couch at home”.

Apple also very carefully states it has a total display resolution of 23 million pixels. Everything else is inference.

This is because we are dealing with two different units of measure, with a “K” being equivalent to something like an inch, while a pixel count is something more like a square inch.

For example when we discuss a 2K TV, the “2K” represents the lengthwise dimension. What we actually mean by “2K” is a resolution with the dimensions 1920 pixels by 1080 pixels.

In turn, a “4K” TV has dimensions of 3840 x 2160 pixels. That is four times the total pixel count, not double, since we double both the width and the height of the 2K resolution.

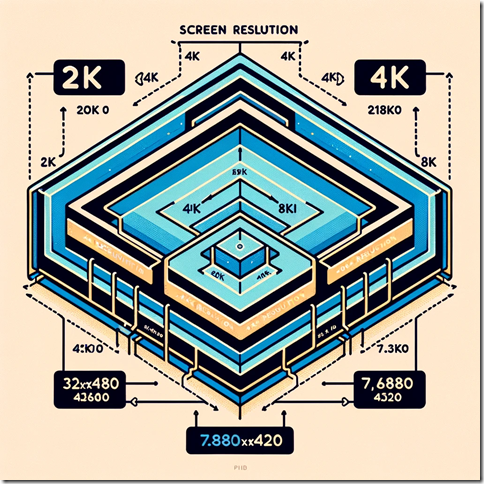

A 2K resolution has about 2 million pixels. A 4K resolution has 4 times the pixel count of a 2K screen, not 2 times. Here’s a listing of the currently available screen resolutions and their total pixel counts:

2K = 1920 x 1080 = 2,073,600 pixels

4K = 3840 x 2160 = 8,294,400 pixels

5K = 5120 x 2880 = 14,745,600 pixels

6K = 6144 x 3160 = 19,415,040 pixels

7K = 7168 x 4032 = 28,901,376 pixels

8K = 7680 x 4320 = 33,177,600 pixels

So the Apple Vision Pro has 3660 x 3200 pixels per eye, or 11,712,000 pixels. That’s actually a bit more than a 4K resolution.

People get to the 8K number by inferring that two 4K lenses add up to an 8K lens. But from the chart above you can see that an 8K resolution actually resolves to about 33 million pixels. The Apple Vision Pro only claims a 23.4 million pixel resolution (11,712,000 x 2 = 23,424,000).

Again referring to the chart above, 23 million pixels is closer to 6K than it is to 8K. So, while Apple marketing doesn’t talk about it in this way, if we wanted to, we would have to say that the Apple Vision Pro provides a 6K total display resolution, not an 8K total display resolution.
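If you’d like to check the arithmetic yourself, here is a minimal Python sketch. It uses only the dimensions listed in the chart above and the per-eye figure quoted in this post; the function names are mine and purely illustrative.

```python
# Width x height for each "K" label, taken from the chart in this post.
RESOLUTIONS = {
    "2K": (1920, 1080),
    "4K": (3840, 2160),
    "5K": (5120, 2880),
    "6K": (6144, 3160),
    "7K": (7168, 4032),
    "8K": (7680, 4320),
}

# Per-eye dimensions quoted in this post for the Apple Vision Pro.
AVP_PER_EYE = (3660, 3200)


def pixel_count(width, height):
    """Total pixels is an area measure: width times height."""
    return width * height


def closest_label(total_pixels):
    """Return the "K" label whose pixel count is nearest to total_pixels."""
    return min(
        RESOLUTIONS,
        key=lambda label: abs(pixel_count(*RESOLUTIONS[label]) - total_pixels),
    )


# Two eyes, so double the per-eye pixel count.
avp_total = 2 * pixel_count(*AVP_PER_EYE)

for label, dims in RESOLUTIONS.items():
    print(f"{label}: {pixel_count(*dims):,} pixels")
print(f"AVP (both eyes): {avp_total:,} pixels, nearest label: {closest_label(avp_total)}")
```

Doubling the per-eye pixel count doubles an area, which lands on the 6K row of the chart rather than the 8K row.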

A similar confusion between linear measurement and area measurement led Alex Kipman to claim that the HoloLens 2 had twice the field of view of the HoloLens 1. The HoloLens 2’s field of view encompassed twice the area of the HoloLens 1’s, but unfortunately that’s not how FOV is typically measured. As with the jump from 4K to 8K, it would have had to contain four times the area in order for the claim to have been true. Karl Guttag thoroughly covered the controversy on his blog.
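The relationship between area and linear size can be sketched in a couple of lines of Python. This is a simplification: it treats the view as a flat rectangle with a fixed aspect ratio and uses no actual HoloLens measurements.

```python
import math


def linear_scale(area_factor):
    """How much width and height each grow when the area grows by
    area_factor, assuming the aspect ratio stays the same."""
    return math.sqrt(area_factor)


print(round(linear_scale(2), 2))  # doubling the area: 1.41
print(round(linear_scale(4), 2))  # quadrupling the area: 2.0
```

Twice the area only buys you about 1.4 times the width and height. To double the linear measure, you need four times the area.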

For lack of a Mac

Whether you decide to develop for the Apple Vision Pro using the native stack (Xcode, SwiftUI, RealityKit) or with the Unity 3D stack (Unity, C#, XR SDK, PolySpatial), you will need a Mac. Xcode doesn’t run on Windows, and even the Unity tools for AVP development need to run on Unity for Mac.

Even more, the specs are fairly specific. You’ll need an Apple silicon chip (M1, M2 or M3), not Intel. You’ll need at least 16 GB of RAM and at least a 256 GB drive.

If you are working in the AR/VR/MR/XR/Spatial Computing/HMD space (what a mouthful that has grown into!) it makes sense to learn as much as you can about the tools used to create spatial experiences. And while the Apple native tools like Xcode and the Vision Pro simulator are free (though you’ll need a $100/yr Apple dev account), the hardware requirements still remain a bit of a barrier to learning AVP development – much less publishing to the AVP.

If you want to develop either VR or AR apps for the AVP using Unity, you’ll need to pay an additional $2K a year for a Unity Pro seat on top of everything you already need for native AVP development.

So I wanted to look into the minimum cost just to learn to develop for the Vision Pro.

This begins with a $100 Apple developer account. It also assumes that to learn the skills, you don’t necessarily need to spend $3500 for an AVP device. The simulator that comes with Xcode is actually very good and can simulate most of the AVP’s capabilities except for SharePlay. This should be welcome news to the vast number of European, Asian and South American AR/VR devs who won’t have access to headsets for anywhere from six months to a year or more.

So let’s start at $100. The next step is looking into the least expensive hardware options.

Cloud-based

The least expensive, temporary solution to get hardware is to rent time in a cloud solution.

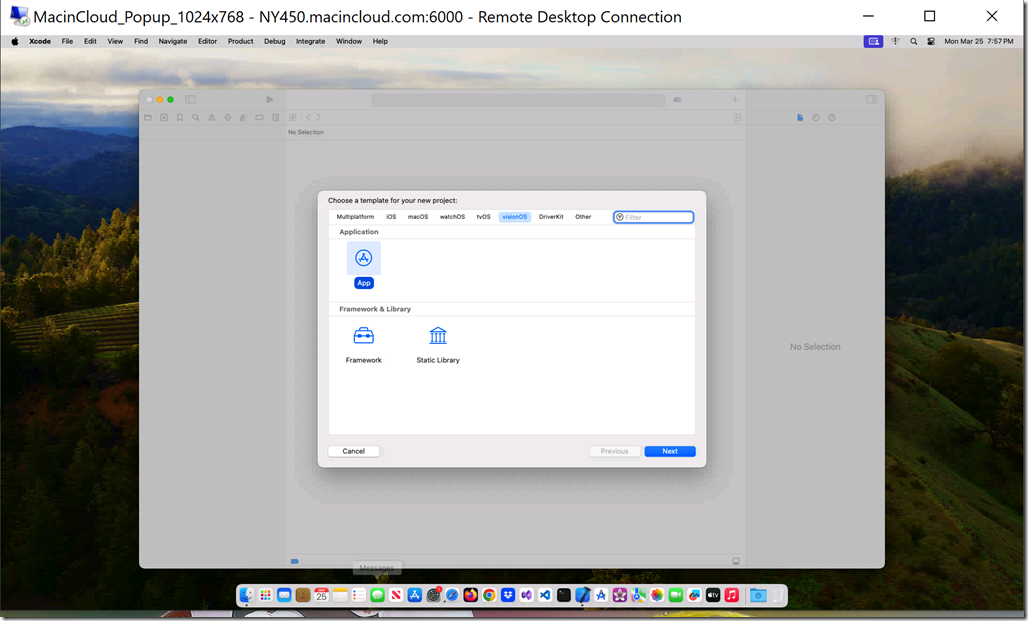

MacInCloud has a VDI solution for Mac minis running an M2 chip with 16 GB of RAM and macOS Sonoma with Xcode 15.2 already on it for $33.50 a month with a 3 hour a day limit. In theory this is a nice solution to start learning AVP development for a few months while you decide if you want to invest more in your hardware.

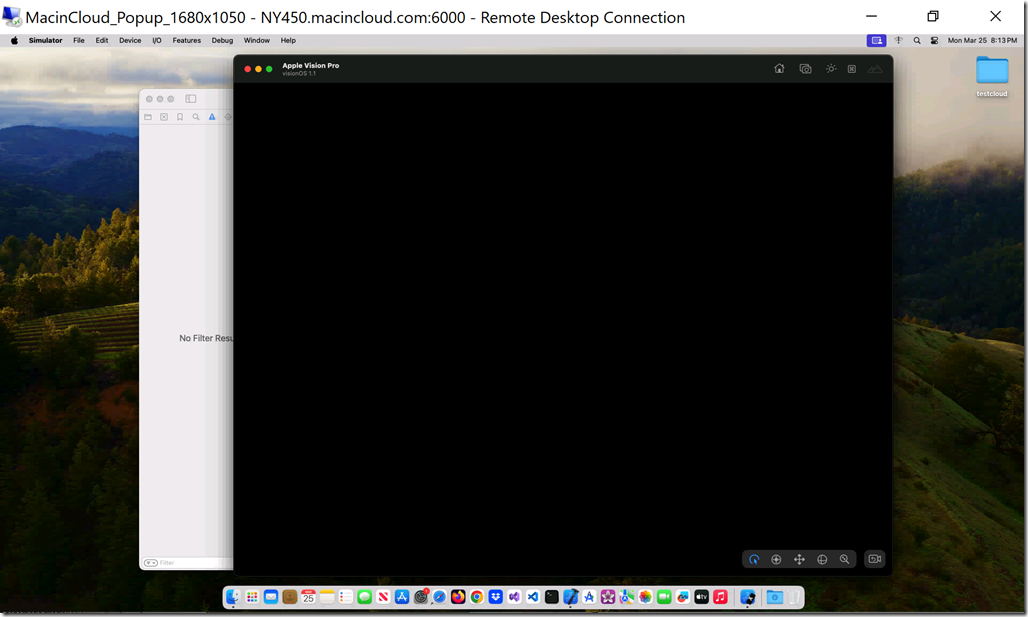

There are some problems with the solution as is, though. First, you are RDPing into a remote machine. If you’ve ever used remote desktop before, you’ll know that there are weird screen resolution issues and, even worse, screen refresh issues with this. It can feel like programming under water. This is why it can only be a temporary solution at best.

Next, it includes an older version of Xcode; we’re currently up to version 15.3. You can request to have additional or updated software installed, so this is really only a problem of convenience, though it is nice to be able to install and uninstall software when you need to.

The good news is that I was able to write a simple AVP project using MacInCloud’s remote Mac. The bad news is that the Xcode preview window never started rendering and, even worse, the Xcode Simulator running visionOS 1.1 always rendered a black screen.

The rendering problem makes this solution unusable, unfortunately. Once they solve it, though, I think MacInCloud could be a viable solution for learning to develop for the Vision Pro.

New vs Used

If a remote solution isn’t currently viable, then the next step is to figure out what it costs to get a minimum spec piece of hardware on the secondary market.

Currently (March, 2024) a new Mac mini with 16 GB RAM, a 256 GB SSD, a standard M2 chip, a power cord and no monitor, keyboard or mouse, costs $800 or $66.58/mo.

A 13-inch MacBook Air with similar specs runs $400 more. An iMac with an M3 chip is $700 more. An M3 MacBook Pro is $1000 more. A bottom of the line M2 Max Mac Studio with 32 GB RAM and 512 GB SSD is $1100 more.

The Cadillac model, for reference, would be a 16-inch MacBook Pro with an M3 Max chip, 96 GB of RAM and a 1 TB SSD for $4299.

________________________________________________________________________________

Apple sells refurbished computers, also. A refurbished 13-inch MacBook Air with M2/16/256 is currently selling for $1,019.

Back Market is a good site for used Macs. I found a 13-inch MacBook Pro there with M1/16/256 for $804 and a similarly spec’d MacBook Air for $725, as well as an M2 MacBook Air for $949. Your mileage may vary.

MacOfAllTrades has a used MacBook Pro M1/16/512 for $700.

MacSales has a used Mac Mini M1/16/256 for $499.
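To put the rental option and the purchase options side by side, here is a rough break-even sketch in Python. It uses only the prices quoted above and ignores resale value, taxes and the 3 hour a day rental cap, so treat it as a back-of-the-envelope estimate.

```python
import math

RENT_PER_MONTH = 33.50  # MacInCloud plan quoted above
USED_MAC_MINI = 499.00  # used Mac mini M1/16/256
NEW_MAC_MINI = 800.00   # new Mac mini M2/16/256


def break_even_months(purchase_price, monthly_rent):
    """First whole month at which cumulative rent meets or exceeds
    the purchase price."""
    return math.ceil(purchase_price / monthly_rent)


print(break_even_months(USED_MAC_MINI, RENT_PER_MONTH))  # used Mac mini
print(break_even_months(NEW_MAC_MINI, RENT_PER_MONTH))   # new Mac mini
```

By this estimate, renting costs more than the used Mac mini after about 15 months and more than the new one after about two years.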

Summary

If you want to learn Apple Vision Pro right now and you are coming from Windows development, your startup cost is going to be around $600:

- $100 Apple Developer Account

- $499 min spec hardware to run Xcode 15.3 and the visionOS simulator

If you are just learning, then the Simulator has all the functionality you need to try out just about anything you can build (again, minus SharePlay and Personas). If you are very bold, you might even be able to publish something to the store to recoup some of your investment.

If you want to develop for the AVP with Unity 3D, your startup costs go up to around $2,600:

- $2,040 Unity Pro license

If you are coming from HoloLens/MagicLeap/Meta Quest development, the question here might just be how much you are willing to pay to not have to learn Swift and RealityKit.
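Here is the same tally as a short Python sketch, using only the figures from this summary. The variable names are illustrative, and note that the developer account and the Unity Pro seat are yearly fees while the hardware is a one-time purchase.

```python
APPLE_DEVELOPER_ACCOUNT = 100  # per year
MIN_SPEC_HARDWARE = 499        # one-time, used M1 Mac mini
UNITY_PRO_LICENSE = 2040       # per seat, per year

# Native route: developer account plus minimum spec hardware.
native_startup = APPLE_DEVELOPER_ACCOUNT + MIN_SPEC_HARDWARE

# Unity route: everything in the native route plus a Unity Pro seat.
unity_startup = native_startup + UNITY_PRO_LICENSE

print(f"Native startup cost: ${native_startup:,}")
print(f"Unity startup cost:  ${unity_startup:,}")
```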

Video capture tips for the Apple Vision Pro

You can take a snapshot of a spatial computing experience in the Apple Vision Pro simply by pressing both the Digital Crown and the top button at the same time.

This doesn’t work for video capture, unfortunately. Video capture on the Apple Vision Pro takes spatial video of the actual world, only, and won’t create a composite video of the physical and the digital worlds.

If you want to take a video of a spatial computing experience, you will need to stream what you see to a MacBook or an iPhone, iPad or AppleTV.

There are two ways to record from the Apple Vision Pro.

- Mirror to another device (stream and then record).

- Use the developer capture tool in Reality Composer Pro (record and then stream).

Mirroring is the more flexible way of doing a recording and allows you to record a video of indefinite length. This is great if you want to do a lot of takes or just want a lot of footage to use.

Using Reality Composer Pro is more complicated and only lets you capture 60 seconds of video at a time. The advantage of capturing video this way is that the quality of the video is much higher, at 30 FPS using 10-bit HEVC.

All the advice I’m passing on to you, by the way, I learned from Huy Le, who is an amazing cinematographer of digital experiences.

Mirror to another device

You can mirror what you see inside the Apple Vision Pro to a MacBook, iPhone, iPad or an Apple TV. This is a really handy feature for doing demos or even if you just want to guide someone through using the device or using an app for the first time. It’s also great for user testing your apps.

In order to mirror, you will need to have both the Apple Vision Pro and the device you are streaming to on the same WiFi network.

1. Inside the Apple Vision Pro, look up – way, way up. A down arrow in a circle will appear at the top of your view. Focus your eyes on it and tap.

2. Next, select the third icon from the left showing two ellipses stacked on top of each other. This is the control center button.

3. On the next screen, select the fourth icon from the left showing two rounded rectangles overlapping. This is the “mirror my view” icon.

4. The final screen will provide you with a list of devices on your WiFi network that you can stream to. Tap on one of these to select it.

5. You will now have a steady 2D video with very little lag streaming to your device. I typically stream to my MacBook. From the MacBook there are two common ways to record your stream. One is to use the built-in recording tool. Press Command-Shift-5 to start recording (it is a good idea to start your recording before you begin streaming). The built-in tool is convenient, but sometimes it is hard to know if it is recording or not. The second way to record your stream is to download OBS, which is a popular open source screen capture tool, and just use that. OBS gives you a lot of fine-grained control over your recording.

6. Because the Apple Vision Pro can shake while you are wearing it, it’s a good idea to run your footage through video editing software like Adobe Premiere Pro and apply a stabilizer effect to it.

Reality Composer Pro

For the highest quality capture (for instance if you are doing promotional footage of your app) you should use the developer capture tool in Reality Composer Pro.

1. To use developer capture, your AVP and your MacBook should be on the same WiFi network. Additionally, the AVP needs to be paired with your MacBook. You may also need to have “developer mode” turned on on your Vision Pro.

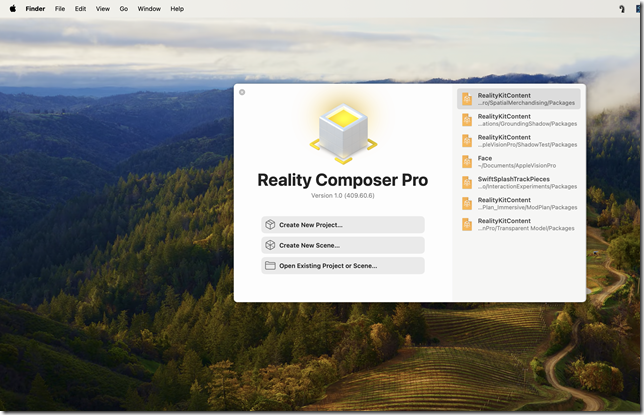

2. To open Reality Composer Pro, you first need to open Xcode on your MacBook. (If you don’t have Xcode 15 installed, you can download it from the Apple site.) Select “Open Developer Tool” on the Xcode menu. Then select “Reality Composer Pro”.

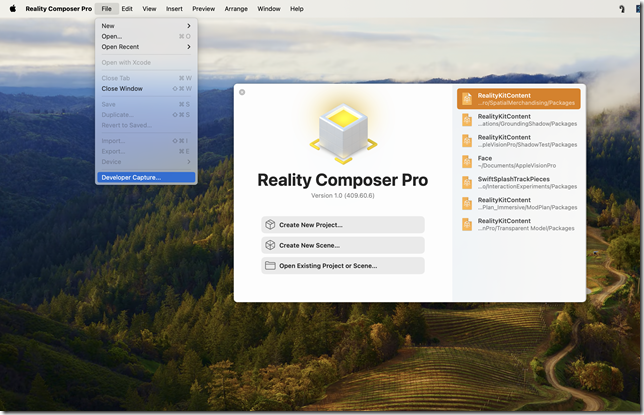

3. In Reality Composer Pro, select “Developer Capture…” from the File menu.

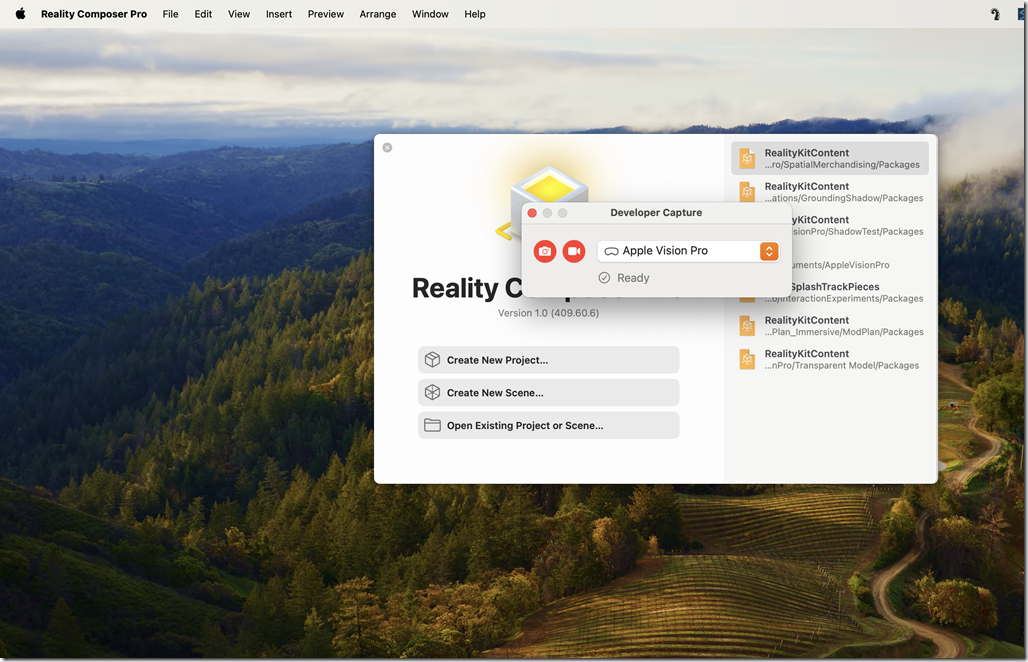

4. Select your Apple Vision Pro device from the drop down menu and click on the record button. This will record on device and then push the completed video to your MacBook when it is done.

5. When the recording is finished it will be deposited on your MacBook desktop as a QuickTime movie.

A note about video capture of in-app video – you can’t

You cannot use either of these techniques to capture a spatial computing experience that incorporates video. If you try to do video capture of apps like the IMAX app or the Disney Plus app, the in-app video will cut out when you begin recording. This may be a DRM feature – but I suspect it might actually be some sort of performance lock on the video buffer.

The (Missing) Apple Vision Pro Developer Ecosystem

When the app store became available on the iPhone 3G in July of 2008, it had 500 native apps available. When the Apple Vision Pro comes out on February 2, 2024, it will reportedly have around 200* native apps available for download.

These numbers are important in understanding how what is occurring this week fits into the origin myth of the smartphone in late capitalist popular culture.

Here I don’t use the term “myth” to mean that what we understand about the origins of the smartphone is untrue – that the app store was Apple’s “killer app”, that a sui generis army of developers became enamored of the device and built a developer ecosystem around it, that the number of native apps, mostly games, built for the iPhone grew rapidly, even exponentially (to 100,000 by 2010), that someone attached a long tail to the app store beast so that a handful of popular apps captured the majority of the money flooding into the app store, and so on. This is our established understanding of what happened from 2008 to the present. I don’t intend to undermine it.

Rather, I’m interested in how this first cycle of the Apple device story, the original course, affects the second cycle, the ricorso. After Apple reached a saturation point for its devices in its primary, affluent markets, it was able to shift and make profits from the secondary market by selling re-branded older phones to the second and third world. But towards the end of the 20-teens, it was clear that even these secondary markets had hit a saturation point. People were not updating their phones as frequently as they used to, worldwide, and this would eventually hit the bottom line. The corso was reaching its period of decline; the old king was wounded; and in this period of transition the golden bough needs to be recovered in order to renew the technological landscape.

In February 2024, Tim Cook, the king of the orchard, is restarting Apple by embracing an iPhone replacement as he prepares to hand his kingdom over to a new king. The roadmap for this is occurring in an almost ritualistic manner, with commercials that echo the best moments of the previous device revolution.

If I am reaching deeply into the collective unconscious to explain what is happening today, it is because modern marketing is essentially myth creation. And Apple has to draw up all of this mythical sense of the importance of the smartphone in order to recreate what it had in 2008. It must draw mixed reality developers into its field of influence in order to create a store that will sustain it through the next 25 years, or risk a permanent decline.

The original 2008 app store, despite a pretty terrible iPhone SDK developer experience, was successful because developers believed in the promise that they could become app millionaires. Secondarily, developers also jumped in because they believed in the romance around the Apple developer ecosystem as a nexus for artists and dreamers.

I believe Apple believes its success is dependent on making an orchard for developers to create in. As beautiful as the new Vision Pro is, it can only be an occasion for the greatness of others. And in order to attract developers into this orchard, Apple must convince them that this has all happened before, in 2008, and that there will be a golden period in the beginning when any app will make money because there are so few apps available – fruit will drop from the trees into developers’ hands and fish will leap from the rivers into their laps. There is a flaw in this myth that, oddly, will ultimately confirm Apple’s preferred narrative.

First and foremost, any developer in the mixed reality space (that is, the space where development for the HoloLens, Magic Leap or the latest generations of Meta Quest occurs) understands that there is a vast crevasse separating the world of MR developers and the world of Apple developers. All the current Apple tools (Xcode, SwiftUI, the various “kits”) are built for flat applications. On the other side, development for mixed reality headsets has been done on relatively mature game engines and for the most part on the Unity Engine. These developers understand spatial user interactions and how to move and light 3D objects around a given space, but they don’t know the first thing about those Apple tools. And the learning curve is huge in either direction. It will take a leap of faith for developers to attempt it.

There was originally some excitement about a Unity toolset for Apple Vision Pro mixed reality apps called PolySpatial, which seemed promising. Initially, however, it was suggested that the price for using it might be in the tens of thousands**. Later still, it appears that much of the team working on it was affected by the recent Unity layoffs. Had it succeeded, it would have offered a bridge for mixed reality developers to cross. But from the early previews, it appears to still be a work in progress and might still take 6 months or longer to get to a mature point.

I’ve only broken the potential Apple Vision Pro developer ecosystem into two tribes, but there are many additional tribes in this landscape. The current Apple development ecosystem isn’t homogeneous, nor is the mixed reality dev ecosystem. Some MR devs come from the games industry and for them MR may be a short detour in their careers. Some MR devs got their big breaks doing original development for the HoloLens in 2016 – these are probably the most valuable devs to have because they have seen 9 years of ups and downs in this industry. There are digital agency natives, who tend to dabble in lots of technologies. There are also veterans of the Creative Technologist movement of 2013, though most of these have gone on to work in AI related fields. The important thing is that none of these people work like any of the others. They have different workflows and different things they find important in coding. They may not even like each other.

Even more vexing — unless you simply want to create a port of an app from another platform — you will probably need a combination of all of these people in order to create something great in the Apple Vision Pro. This isn’t easy. And because it isn’t easy, it will take a lot more time for the Apple Vision Pro to grow its store of native apps than anticipated. This is going to be a long road.

So how long will it take Apple devs to get their heads inside spatial computing? And how long will it take coders with MR experience to learn the Apple tools? To be generous, let’s say that if a small team is very focused and works together well, and if they start today, they will be able to skill up in about five months. They will need an additional 3 months to design and build a worthwhile app. Supposing some overlap between learning and building, let’s say this comes to six months. This is still six months from launch, or sometime in August, before the number of Vision Pro apps starts to pick up.

This is six months in which the availability of native apps for the Vision Pro will be relatively low and in which any decent app will have a good chance of making serious money. The current inventory for Vision Pros in 2024 is estimated to be around 400,000. 400,000 people with Apple Vision Pros, assuming they sell out (and Apple has already sold 200,000 before launch), will be looking for things to do with their devices. It’s a good bet that someone who has paid approximately $3,500 for a spatial computing headset will be willing to spend a few hundred dollars more for apps for their device.

And let’s say an average app will go for $5. Assuming just a quarter of available app purchasers will be interested in buying your app, you could easily make $500,000. This is a decent return for a few months of learning a new software platform. And the sooner you learn it, the more likely you are to be at the root of the long tail rather than at its tip.
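The back-of-the-envelope math here is simple enough to sketch in Python. The inputs are the estimates from this post (400,000 headsets, a quarter of owners buying, a $5 price). The result is gross revenue, before any store commission or taxes, and the function name is just for illustration.

```python
def estimated_gross_revenue(headsets, buyer_share, price):
    """Gross sales if buyer_share of headset owners each buy one copy."""
    return headsets * buyer_share * price


revenue = estimated_gross_revenue(headsets=400_000, buyer_share=0.25, price=5.00)
print(f"${revenue:,.0f}")  # $500,000
```

Change any of the three inputs and the result scales linearly, so halving the buyer share or the installed base halves the payout.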

Which is to say, even if you attempt to escape the myth that Apple is creating for itself, you will eventually find your way back to it. Such is the nature of myths and the hero’s journey. They are always true in a self-fulfilling way.

_________________________________

* On 2/2/24, the AVP was released with 600 native apps.

** Currently PolySpatial and visionOS packages for Unity require a $2000/seat/yr Unity Pro license.

New York Augmented Reality Meetup presentation

I did a presentation on ‘Before Ubiquity’ to the New York AR Meetup in November. It went rather well and I hope you like it. Andrew Agus, who’s been keeping the AR flame burning on the East Coast for many years, read the original blog post and thought it could be repackaged as a talk. He was right!

The New York Augmented Reality group is one of the OGs of AR. Among many notable meetings, fireworks flew in the April 11, 2022 “Great Display Debate” between Jeri Ellsworth and Karl Guttag. You’ll come away knowing a lot more about AR and VR after watching it.

Are Prompt Engineering Jobs at Risk because of A.I.?

As you know, many AR/MR developers left the field last year to become prompt engineers. I heard some bad news today, though. Apparently A.I. is starting to put prompt engineers out of work.

A.I. is now being used to take normal written commands (sometimes called “natural” language, sometimes called by non-specialists “language”) and process them into more effective generative A.I. prompts. There’s even a rumor circulating that some of the prompts being used to train this new generation of A.I. come from the prompt engineers themselves. As if every time they use a prompt, it is somehow being recorded.

Can big tech really get away with stealing other people’s work and using it to turn a profit like this?

On the other hand, my friend Miroslav, a crypto manager from Zagreb, says these concerns are overblown. While some entry level prompt engineering jobs might go away, he told me, A.I. can never replace the more sophisticated prompt engineering roles that aren’t strictly learned by rote off of a YouTube channel.

“A.I. simply doesn’t have the emotional intelligence to perform advanced tasks like creating instructional YouTube videos about prompt engineering. Content creation jobs like these will always be safe.”

Learning to Program for the Apple Vision Pro

Learning to program for the Apple Vision Pro can be broken into 4 parts:

- Learning Swift

- Learning SwiftUI

- Learning RealityKit

- Learning Reality Composer Pro (RCP)

There are lots of good book and video resources for learning Swift and SwiftUI. Not so much for RealityKit or Reality Composer Pro, unfortunately.

If you want to go all out, you should get a subscription to https://www.oreilly.com/ for $499 per yr. This gives you access to the back catalogs of many of the leading technical book publishers.

Swift

To get started on Swift, iOS 17 Programming for Beginners by Ahmad Sahar is pretty good, though the official Apple documentation will get you to the same place: https://developer.apple.com/documentation/swift

If you want to dive deeper into Swift, after learning the basics, the Big Nerd Ranch Guide, 3rd edition, is a good read: Swift Programming: The Big Nerd Ranch Guide

But if you want to learn Swift trivia to stump your friends, then I highly recommend Hands-On Design Patterns with Swift by Florent Vilmart and Giordano Scalzo.

SwiftUI

SwiftUI is the library you would use to create menus and 2D interfaces. There are 2 books that are great for this, both by Wallace Wang. Start with Beginning iPhone Development with SwiftUI and once you get that down, continue, naturally, with Wallace’s Pro iPhone Development with SwiftUI.

RealityKit

Even though Apple first introduced RealityKit in 2019, very few iPhone devs actually use it. So even though there are quite a few books on SceneKit and ARKit, RealityKit learning resources are few and far between. Udemy has a course by Mohammed Azam, Building Augmented Reality Apps in RealityKit & ARKit, that is fairly well rated. It’s on my shelf but I have yet to start it.

Reality Composer Pro

Reality Composer Pro is sort of an improved version of Reality Composer and sort of something totally different. It is a tool that sits somewhere between the coder and the artist – you can create models (or “entities”) with it. But if you’re an artist, you are probably more likely to import your models from Maya or another 3D modeling tool. As a software developer, you can then attach components that you have coded to these entities.

There are no books about it and the resources available for the previous Reality Composer aren’t really much help. You’ll need to work through Apple’s WWDC videos and documentation to learn to use RCP:

WWDC 2023 videos:

Explore materials in Reality Composer Pro

Work with Reality Composer Pro content in Xcode

Apple documentation:

Designing RealityKit content with Reality Composer Pro

About Polyspatial

If you are coming at this from the HoloLens or Magic Leap, then you are probably more comfortable working in Unity. Unity projects can, under the right circumstances, deploy to the visionOS Simulator. You should just need the visionOS support packages to get this working. PolySpatial is a tool that allows you to convert Unity shaders and materials into visionOS’s native shader framework, and I think this is only needed if you are building mixed reality apps, not for VR (fully immersive) apps.

In general, though, I think you are always better off going native for performance and features. While it may seem like you can use Unity PolySpatial to do in visionOS everything you are used to doing on other AR platforms, these tools ultimately sit on top of RealityKit once they are deployed. So if what you are trying to do isn’t supported in RealityKit, I’m not sure how it would actually work.