Microsoft Bot Framework is an open-source SDK and set of tools for developing chatbots. One advantage of building chatbots with the Bot Framework is that you can easily integrate your bot service with the powerful AI algorithms available through Azure Cognitive Services. This is a quick and easy way to give your chatbot superpowers when you need them.

Microsoft Cognitive Services is an ever-growing collection of algorithms developed by experts in the fields of computer vision, speech, natural-language processing, decision assistance, and web search. The services simplify a variety of common AI-based tasks and expose them through web APIs that are easy to consume. The APIs are also constantly being improved, and some can even get smarter based on the information you feed them.

Here is a quick highlight reel of some of the current Cognitive Services available to chatbot creators:

Language

People have a natural ability to say the same thing in many ways. Intelligent bots need to be just as flexible in understanding what human beings want. The Cognitive Services Language APIs provide language models to determine intent, so your bots can respond with the appropriate action.

The Language Understanding Intelligent Service (LUIS) easily integrates with Azure Bot Service to provide natural language capabilities for your chatbot. Using LUIS, you can classify a speaker’s intents and perform entity extraction. For instance, if someone tells your bot that they want to buy tickets to Amsterdam, LUIS can help identify that the speaker intends to book a flight and that Amsterdam is a location entity for this utterance.
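
To make the intent and entity idea concrete, here is a minimal Python sketch of how a bot might act on a prediction once it has one. The payload is hand-written and only loosely modeled on a LUIS-style response; the field names, the `BookFlight` intent, the `Location` entity, and the `route_utterance` helper are all assumptions for illustration, and no service is actually called.

```python
from typing import Any, Dict

# Hypothetical prediction payload (an assumption, not real service output).
SAMPLE_PREDICTION: Dict[str, Any] = {
    "query": "I want to buy tickets to Amsterdam",
    "prediction": {
        "topIntent": "BookFlight",
        "intents": {"BookFlight": {"score": 0.94}, "None": {"score": 0.03}},
        "entities": {"Location": ["Amsterdam"]},
    },
}


def route_utterance(payload: Dict[str, Any], min_score: float = 0.5) -> str:
    """Pick a reply based on the top intent and any extracted entities."""
    prediction = payload["prediction"]
    intent = prediction["topIntent"]
    score = prediction["intents"][intent]["score"]

    # Fall back when the model is not confident enough in its top intent.
    if score < min_score:
        return "Sorry, I did not understand that."

    if intent == "BookFlight":
        locations = prediction["entities"].get("Location", [])
        destination = locations[0] if locations else "your destination"
        return f"Searching flights to {destination}."

    return "Sorry, I can't help with that yet."


if __name__ == "__main__":
    print(route_utterance(SAMPLE_PREDICTION))
```

The confidence threshold is a design choice: it keeps the bot from acting on a weak guess and gives it a chance to ask the user to rephrase instead.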

While LUIS offers prebuilt language models to help with natural language understanding, you can also customize these models for the particular language domains that are pertinent to your needs. LUIS also supports active learning, allowing your models to get progressively better as more people communicate with your bot.

Decision assist services

Cognitive Services has knowledge APIs that extend your bot’s ability to make judgments. Where the language understanding service helps your chatbot determine a speaker’s intention, the decision services help your chatbot figure out the best way to respond. Personalizer, currently in preview, uses machine learning to provide the best results for your users. For instance, Personalizer can make recommendations or rank a chatbot’s candidate responses to select the best one. Additionally, the Content Moderator service helps identify offensive language, images, and video, filtering profanity and adult content.
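
The idea of ranking candidate responses and learning from feedback can be illustrated with a toy Python sketch. This is not Personalizer's algorithm or its API: `ToyRanker`, its methods, and the epsilon-greedy strategy are assumptions chosen only to show the general rank-then-reward loop in a few lines.

```python
import random
from collections import defaultdict
from typing import Optional, Sequence


class ToyRanker:
    """Toy epsilon-greedy ranker (hypothetical, for illustration only)."""

    def __init__(self, epsilon: float = 0.1, seed: Optional[int] = None) -> None:
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.totals = defaultdict(float)  # summed reward per response id
        self.counts = defaultdict(int)    # number of rewards per response id

    def average(self, action_id: str) -> float:
        """Mean reward seen so far for one response (0.0 if never rewarded)."""
        n = self.counts[action_id]
        return self.totals[action_id] / n if n else 0.0

    def rank(self, action_ids: Sequence[str]) -> str:
        """Usually pick the best-known response; occasionally explore."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(action_ids)
        return max(action_ids, key=self.average)

    def reward(self, action_id: str, value: float) -> None:
        """Record how well a chosen response worked (for example 0.0 to 1.0)."""
        self.totals[action_id] += value
        self.counts[action_id] += 1


if __name__ == "__main__":
    ranker = ToyRanker(epsilon=0.1, seed=7)
    responses = ["show_deals", "ask_dates", "suggest_hotel"]
    choice = ranker.rank(responses)
    ranker.reward(choice, 1.0)  # pretend the user reacted well to this reply
    print(choice, ranker.average(choice))
```

In a bot, the reward would come from some signal that the reply was useful, such as the user clicking a suggestion; what counts as a reward is a design decision for each application.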

Speech recognition and conversion

The Speech APIs in Cognitive Services can give your bot advanced speech skills that leverage industry-leading algorithms for speech-to-text and text-to-speech conversion, as well as Speaker Recognition, a service that lets people use their voice for verification. The Speech APIs use built-in language models that cover a wide range of scenarios with high accuracy.

For applications that require further customization, you can use the Custom Recognition Intelligent Service (CRIS). CRIS lets you calibrate the language and acoustic models of the speech recognizer by tailoring them to the vocabulary of the application and to the speaking style of your bot’s users. This helps your chatbot overcome common challenges to communication such as dialects, slang, and even background noise. If you’ve ever wondered how to create a bot that understands the latest lingo, CRIS is the bot enhancement you’ve been looking for.

Web search

The Bing Search APIs add intelligent web search capabilities to your chatbots, effectively putting the internet’s vast knowledge at your bot’s fingertips. Your bot can access billions of:

· webpages

· images

· videos

· news

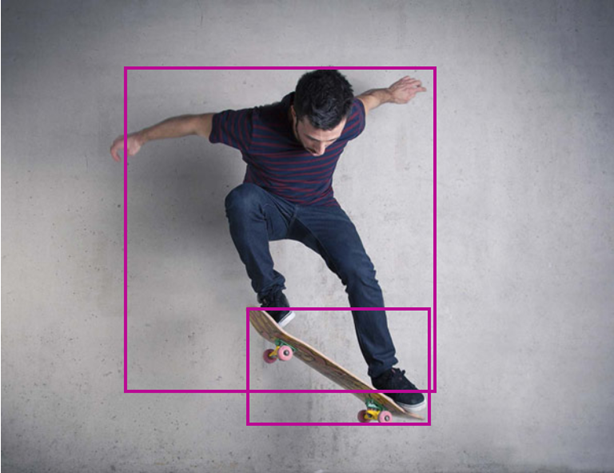

Image and video understanding

The Vision APIs bring advanced computer vision algorithms for both images and video to your bots. For example, you can use them to recognize objects, people’s faces, age, gender, or even feelings.

The Vision APIs support a variety of image-understanding features. They can categorize the content of images, determining if the setting is at the beach or at a wedding. They can perform optical character recognition on your photo, picking out road signs and other text. The Vision APIs also support several image and video-processing capabilities, such as intelligently generating image or video thumbnails, or stabilizing the output of a video for you.

Summary

While chatbots are already an amazing way to help people interact with complex data in a human-centric way, extending them with web-based AI is a clear opportunity to make them even better assistants for people. Easy-to-use AI algorithms like the ones in Microsoft Cognitive Services remove language friction and give your chatbots superpowers.
