In a recent talk (captured on YouTube), a Microsoft employee explained the difference between an “app” and an “experience” this way: “We have divas in our development group and they want to make special names for things.” He expressed an opinion that many developers in the Microsoft stack probably share but do not normally say out loud. These appear to be two terms for the same thing, to wit, a unit of executable code, yet some people use one and some people use the other. In fact, the choice of terms tends to reveal more about the people who are talking about the “unit of code” than about the code itself. To deepen the linguistic twists, we haven’t even always called apps “apps.” We used to call them “applications” and switched over to the abbreviated form, it appears, following the success of Apple’s “App Store,” which contained “apps” rather than “applications.” There is even an obvious marketing connection between “Apple” and “app” that goes back at least as far as the mid-80s.

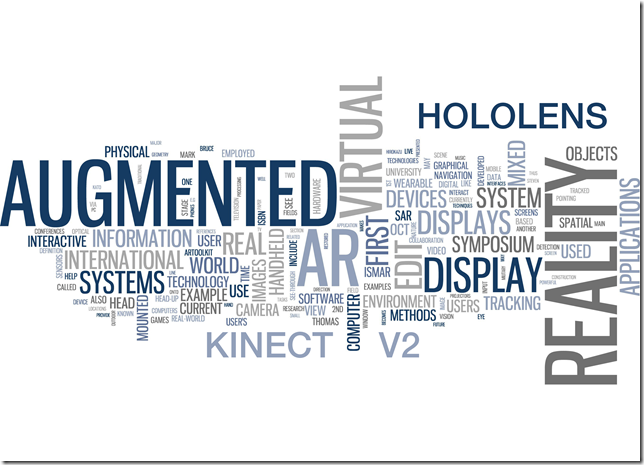

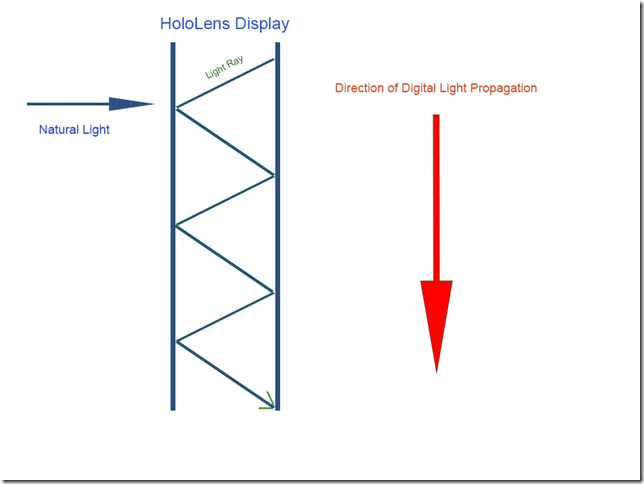

I am currently a Microsoft MVP in a sub-discipline of the Windows Development group called “Emerging Experiences.” As an Emerging Experiences MVP, I find the distinction between “app” and “experience” particularly poignant. The “emerging” aspect of our group’s name is fairly evident: EE MVPs specialize in technologies like the Kinect, Surface Hub and large-screen devices, Augmented Reality devices, face recognition, Ink, wearables, and other More Personal Computing capabilities. “Experiences” is problematic, however, because using that term to describe our group raises the question of what the group is actually about. “Experiences” is not native to the Microsoft developer ecosystem but is instead transplanted from the agency and design world, much like the phrase “creative technologist,” which is more or less interchangeable with “developer” but also carries a set of presuppositions, assumptions, and a background in agency life. In other words, it assumes a specific set of prior experiences.

As a Microsoft Emerging Experiences MVP, I have an inherent responsibility to explain what an “experience” is to the wider Microsoft ecosystem. If I succeed, you too will start to see the appeal of this term and will be able to use it in the appropriate situations. Rather than go at it head-on, though, I am going to do something the philosopher Daniel Dennett calls “nudging our intuitions.” I will take you through a series of examples and metaphors that will provide the necessary background for understanding how the term “experience” came about and why it is useful.

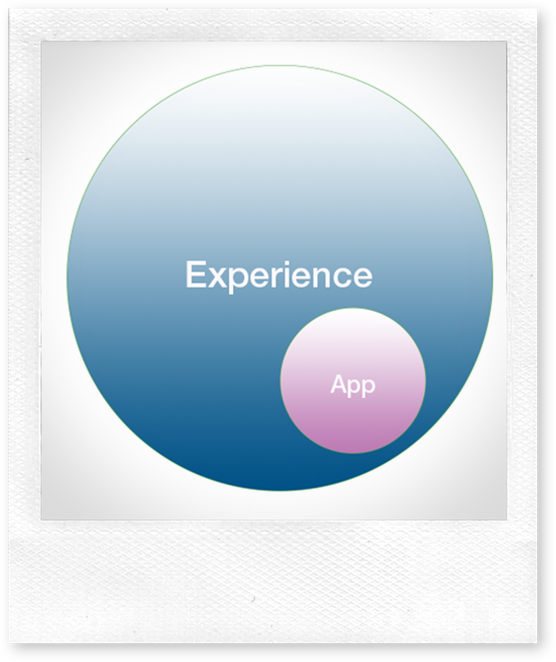

The obvious thing to do at this point is to show how these two terms overlap and diverge with a convenient Venn diagram. As the diagram above shows, all apps are automatically experiences, which is why “experience” can always be substituted for “app.” The converse, however, does not hold: not all experiences are apps.

Consider the Twitter wall created for a conference a few years ago that was distinctly non-digital. Although this wall did use the Twitter API, it involved many volunteers hand-writing tweets that included hashtags about the conference onto Post-it notes and then sticking them along one of the conference hallways. Conceptually, this is a “thing” that takes advantage of a social networking phenomenon that is less than a decade old. While the Twitter API sits at the heart of it, the interface with the API is completely manual. Volunteers are required to evaluate and cull the Twitter data stream for relevant information. The display technology is also manual and relies on weak adhesive and paper, an invention from the mid-70s. Commonly we would write an app to perform this process of filtering and displaying tweets, but that’s not what the artists involved did. Since there was no coding involved, it does not make sense to call the whole thing an app. It is a quintessential “experience.” The Post-it Twitter wall provides us with a provisional understanding of the difference between an app and an experience: an app involves code, while an experience can involve elements beyond writing code.

Why do we go to movie theaters when we can often stay at home and enjoy the same movie? This has become a major problem for the film industry and for movie theaters in general. Theater marketing over the past decade or so has responded by talking about the movie-going experience. What is this? At a technology level, the movie-going experience involves larger screens and high-end audio equipment. At an emotional level, however, it also includes sticky floors, fighting with a neighbor for the armrest, buying candy and an oversized soda, watching previews and smelling buttered popcorn. All of these things, especially the smell of popcorn, which has a peculiar ability to evoke memories, remind us of past enjoyable experiences, many of which we shared with friends and family members.

If you go to the movies these days, you will have noticed a campaign against men in hoodies videotaping movies. This isn’t, in fact, an ad meant to discourage you from pulling out your smartphone and recording the movie you are about to watch. That would be rather silly. Instead, it’s an attempt to surface all those good, communal feelings you have about going to the movies and to distinguish them, subtly and sub rosa in your mind, from bad feelings about downloading movies from the Internet over peer-to-peer networks, which the ad associates with unsociable and creepy behavior. The former is presented as a cluster of good experiences and the latter as a cluster of bad experiences, all while cleverly never accusing you, personally, of improper behavior. In the best marketing form, it goes after the pusher rather than the user.

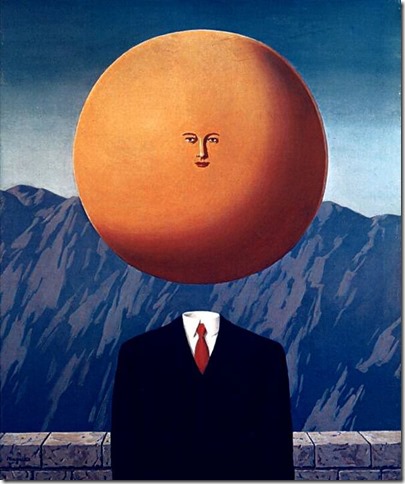

Returning to those happy experiences, it’s clear that an experience goes well beyond what we see in the foreground – in this case, the movie itself. A movie-going experience is mostly about things other than the movie. It’s about all the background activities we associate with movie-going as well as memories and memories of memories that are evoked when we think about going to the movies.

We can think about the relationship between an app and an experience in a similar way. When we speak about an app, we typically think of two things: the purpose of the app and the code used to implement it. When we talk about an experience, we include these but also forecasts and expectations about how a user will feel about the app, the ease with which they’ll use it, what other apps they will associate with this app and so on. In this sense, an experience is always a user experience because it concerns the app’s relationship to a user. Stripped down, however, there is also a pure app which involves the app’s code, its performance, its maintainability, and its extensibility. The inner app is isolated, for the most part, from concerns about how the intended user will use it.

At this point in the dialectic, we can differentiate experiences as something designers do and apps as something developers make. While this is another good provisional explanation, it misses a bigger underlying truth: all apps are also experiences, whether you plan them to be or not. Just because you don’t have a designer on your project doesn’t mean users won’t be involved in judging it at some point. Even by not investing in any sort of overall design, you have sent out a message about what you are focused on and what you are not. The great exemplar of this, an actual exception that proves the rule, is the Google homepage. A lot of designer thinking has gone into making the Google homepage as simple and artless as possible in order to create a humble user experience. It is intentionally unpresumptuous.

What this tells us is that a pure app will always be an abstraction of sorts. You can ignore the overall impression that your app leaves the user with, but you can’t actually remove the user’s experience of your app. The experience of the app, at this provisional stage of explanation, is the real thing, while the notion of an app is an abstraction we perform in order to concentrate on the task of coding the experience. In turn, all the code that makes the app possible is something that will never be seen or recognized by the user. The user only knows about the experience. They will only ever be aware of the app itself when something breaks or fails to work.

What then do we sell in an app store?

We don’t sell apps in an App Store any more than we sell windows in the Windows Store. We sell experiences that rely heavily on first impressions in order to grab people’s attention. This means the iconography, the description, and ultimately the reviews are the most important things that go into making experiences in an app store successful. Given that users have limited time to devote to learning your experience, making the purpose of the app self-evident and making it easy to use and master are two additional concerns. If you are fortunate, you will have a UX person on hand to help make the application easy to use, a visual designer to make it attractive, and a product designer or creative director to make the overall experience compelling.

Which gets us to a penultimate, if still provisional, understanding of the difference between an “app” and an “experience.” These are two ways of looking at the same thing, and they delineate two different roles in the creation of an application, one technical and one creative. The coder on an application development team will need to be primarily concerned with making [a thing] work, while the creative lead will be primarily concerned with determining what [it] needs to do.

There’s one final problem with this explanation, however. It requires a full team where the coding role and the various design roles (creative lead, user experience designer, interactive designer, audio designer, etc.) are clearly delineated. Unless you are already working in an agency setting or at least a mid-sized gaming company, the roles are likely going to be much more blurred. In that case, thinking about “apps” is an artificial luxury, while thinking about “experiences” becomes everyone’s responsibility. If you are working on a very small team of one to four people, the problem is exacerbated. On a small team, no one has the time to worry about “apps.” Everyone has to worry about the bigger picture.

Everyone except the user, of course. The user should only be concerned with things they can buy in an app store with a touch of the thumb. The user shouldn’t know anything about experiences. The user should never wonder about who designed the Google homepage. The user shouldn’t be tasked with any of these concerns because the developers of a good experience have already thought this all out ahead of time.

So here’s the final, no longer provisional explanation of the difference between an app and an experience. An app is for users; an experience is something makers make for users.

This has a natural corollary: if as a maker you think in terms of apps rather than experiences, then you are thinking too narrowly. You can call it whatever you want, though.

I wrote earlier in this article that the term emerging experiences constantly requires explanation and clarification. The truth, however, is that “experience” isn’t really the thing that requires explanation – though it’s fun to do so. “Emerging” is actually the difficult concept in that dyad. What counts as emerging is constantly changing – today it is virtual reality head-mounted displays. A few years ago, smart phones were considered emerging but today they are simply the places where we keep our apps. TVs were once considered emerging, and before that radios. If we go back far enough, even books were once considered an emerging technology.

“Emerging” is a descriptor for a certain feeling of butterflies in the stomach about technology and a contagious giddy excitement about new things. It’s like the new car smell that captures a sense of pure potential, just before what is emerging becomes disappointing, then re-evaluated, then old hat and boring. The sense of the emerging is that thrill holding back fear which children experience when their fathers toss them into the air; for a single moment, they are suspended between rising and falling, and with eyes wide open they have the opportunity to take in the world around them.

I love the emerging experience.