I’m currently sitting in my room at the L.A. Grand Hotel waiting for the L.E.A.P. conference to start. I’ve been holding off on this comparison post because I had promised Dennis Vroegop I would give it first as a talk at the Techorama Netherlands conference – which I did last week. I will do a feature comparison based on publicly available information, then highlight features unique to the Magic Leap, and then distinguish subtle but important differences that only become apparent from spending months with these devices at the developer level. Finally I want to point out design improvements in the Magic Leap that are so good for Mixed Reality that I predict they will be incorporated into the next version of HoloLens.

Keep in mind that this is a comparison of two different generations of devices. The Magic Leap One is coming out two years after the HoloLens and would be expected to be better. At the same time, the HoloLens v2 is being released some time in 2019 and can be expected to be better still.

1. Field of View

In raw numbers, the field of view of the Magic Leap One is approximately 25% better than the HoloLens. The HoloLens field of view is estimated to be about 29-30 degrees wide and 17 degrees high. The Magic Leap One is 40 degrees wide by 30 degrees high. There is a corresponding difference in resolution, with the HoloLens offering 1268 by 720 per eye and the Magic Leap One providing 1280 by 960 per eye.

The Magic Leap One uses the same wave guide display technology that the HoloLens does, however, so how did they pump up the FOV? First, the ML1 has a more powerful battery than the HoloLens does, and it’s often been claimed by Microsoft that FOV is largely dependent on the power of the projection. This is probably offset, though, by the fact that the ML1 is using more power to project in two planes instead of only one like the HoloLens does (with 6 Waveguide layers compared to 4 in the HoloLens).

Another trick is that the waveguides in the Magic Leap are closer to the wearer’s eyes than they are in the HoloLens. As a consequence, you can wear glasses underneath the HoloLens while you cannot do so comfortably under the Magic Leap device.

In addition to this, Jasper Brekelmans and Dennis Vroegop suggested over coffees along the Amstel River (in a conversation about David Copperfield) that because one’s peripheral vision is closed off in the ML1, the perceived FOV may be even larger than the actual. The theory behind this is that, due to the widespread use of glasses, we have become used to not paying attention to our peripheral vision so much and consequently are comfortable with this tunneling of our vision.

Blocking off the peripheral field of view might cause issues in certain industrial settings, but the general effect is that what you can see as a proportion of your overall FOV is much larger in the ML1 than it is in the HoloLens. Or another way of putting this is that the empty areas of your FOV, as a proportion of your available FOV, is much smaller than it is in the HoloLens.

On top of this, the aspect ratio of the FOV in the ML1 is much taller than in the HoloLens, which may end up doing a better job at accommodating vertical seccaddic movements of the eyes.

Because of the narrower gap between the device and the wearer’s eyes, the Magic Leap can’t accommodate glasses as the Hololens can. To compensate, Magic Leap is developing relationships with online eyeglass manufacturers to provide prescription inserts that can be placed in front of the waveguides and magnetically lock into place. There’s some controversy over whether this is a good or a bad thing. Some developers have expressed concern that this will make demoing Magic Leap at events more difficult than demoing HoloLens, since those with poor vision will either not be able to participate or, alternatively, we will be forced to carry around a large suitcase of prescription inserts to every event.

On the other hand, when I think of what MR will be like in the future, I tend to think of them resembling real glasses (and not electronic contacts, which simply scare me). When they reach the size and ubiquity of modern glasses, it will make sense for each person to have their own personalized device with their appropriate prescription. Magic Leap is on the right track in this case. It’s just in the intervening period that we have to figure out how to share our limited, expensive devices with others.

| HoloLens v1 | Magic Leap One | |

| Price | $3000 – $5000 | $2300 |

| OS | Windows | Android variant |

| Field of View | ~30 deg x 17 deg | 40 deg x 30 deg |

| Resolution | 1268 x 720 per eye | 1280 x 960 per eye |

| Depth Sensor | Time of Flight | Time of Flight |

| Display Type | Wave Guide | Wave Guide |

| Hand Gestures Recognized | 2 | 9 |

| Underlying comic book technology | Light Engines | Light Fields |

| Controller | Click | 6 DOF |

| Hand tracking | limited | fingers (3 joints each) |

| Processing unit | Above nose | Light pack |

| Audio | Spatial Sound | Spatial Sound |

2. Hardware Specs (It’s all about the battery)

| HoloLens v1 | Magic Leap One |

| Intel Atom x5-Z8100 1.04 GHz Intel Airmont (14nm) 4 Logical Processors 64-bit capable |

NVIDIA® Tegra X2 SOC 2 Denver 2.0 64-bit cores + 4 ARM Cortex A57 64-bit cores (2 A57’s and 1 Denver accessible to applications) |

| 8086h (Intel) | GPU. NVIDIA Pascal™, 256 CUDA cores; Graphic APIs: OpenGL 4.5, Vulkan, OpenGL ES 3.3+ |

| 2GB RAM | 8GB RAM |

| 64GB Storage | 128GB Storage |

The Magic Leap One is overall a much beefier machine than the current HoloLens. While both the HoloLens and the Magic Leap One advertise a 3 hour battery life, these can mean vastly different things. In order to drive all of its extra hardware, the Magic Leap One needs a much beefier battery. The ML1 is powered by a twin-cell battery with 36.77 Wh, running at 3.83 V. The HoloLens has a 1.65 Wh battery.

For overall performance, the larger battery means the world meshes (i.e. surface reconstruction, world mapping) are much denser and more frequently updated on the Magic Leap than on the HoloLens. The Time-of-Flight depth camera can fire off more frequently and for longer periods.

The larger battery and beefier specs also translate to much better 3D performance. The HoloLens is able to run 30,000 polygons at 60 fps. Beyond that, the fps begins to drop. The Magic Leap runs upwards of 1 million polygons at 60 fps.

On the downside, that more powerful battery rig needs a fan to cool it whereas the HoloLens is passively cooled. In laboratory and medical scenarios where a sterile environment must be maintained, active cooling with a fan could be a problem.

3. The HoloLens and Tracking

The HoloLens uses 4 monochrome cameras (“environment aware sensors”), an accelerometer, magnetometer and gyroscope in a sensor fusion configuration, and a custom HPU to perform head tracking. The Magic Leap one has a similar setup minus the HPU.

The HoloLens tracking is still somewhat better than the ML1’s. It loses tracking less frequently and digital content is less jittery when seen up close or while the wearer is in motion.

Overall, though, tracking performance is fairly close between the two devices.

4. Magic Leap Extras

The ML1 has a couple of features that are simply outside of the box. One is the eye tracking. There are inward facing cameras that track the wearer’s eye movements as invisible IR flashes.

The tracking is not continuous and is captured at a much lower resolution level than the displays. While they shouldn’t be used for direct user interactions, they are great for providing context for other interactions. It would be great if someone would write a keyboard that uses eye tracking to select keys. In the meantime, I wrote this heat vision demo that uses eye tracking to burn the walls of my house — I think of it as “Superman with a Migraine”. Note the eye-blink tracking.

The other cool extra in the Magic Leap is two planes of focus. Most VR devices have a single plane of focus at infinity. The HoloLens has a single plane of focus set at two meters.

In the magic leap one, when you look at near objects, objects further away (on the outer plane) seem to go out of focus. When you look at objects close up, the objects further away go out of focus. I would guess that the close plane is around a meter and the out one about 3 meters but I’m not really sure. In the Lumin OS .91, there is also a sporadic green shift in the near plane (which I expect will be fixed soon).

5. The Tether

The Magic Leap One is made up of two parts: the Light Pack and the Light Wear. They are connected by a cable. The Light Wear contains all the sensors, projectors and displays while the Light Wear, worn at the hip, contains all the computer bits and the battery.

This is an engineering choice that allows for a much larger power source. Without the tether solution, a large battery would not be possible. Without the large battery, the ML1’s enhanced depth sensing, improved graphics processing and larger field of view would not be possible.

In addition, this design makes the Magic Leap a much more comfortable fit on the head. The weight distribution is better than on the HoloLens, it is lighter, and it doesn’t require extra straps.

The tether solution is actually so effective that I would be surprised if the HoloLens v2 does not follow a similar design. The original one-piece “tetherless” solution Microsoft came up with for the HoloLens was visionary, but severely limiting.

6. Developing

If you have ever developed in Unity for the Android (or really any other device) then you know how to develop for Magic Leap in Unity. You press a button and your app compiles to an .mpk image (Android uses “.apk” file extensions). If your device is attached, you can deploy directly by clicking on “build and run”.

Magic Leap apps can also be built with the Unreal Engine.

HoloLens apps run on a Unity player sandboxed in a UWP app. The development cycle consequently involves exporting your HoloLens app as a Visual Studio project targeting UWP and then building and deploying in UWP. In general (and it may just be me) this has been tedious.

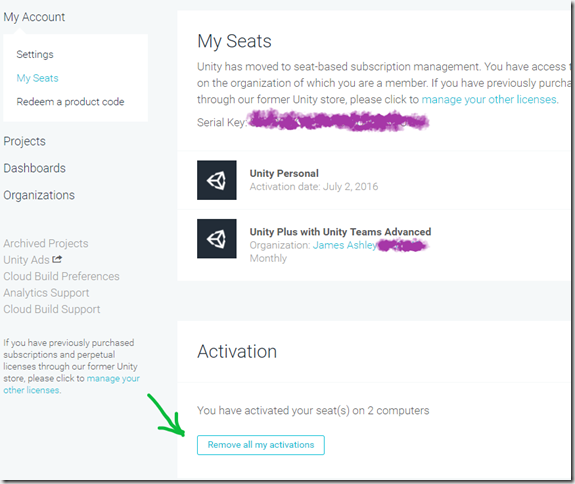

It became even worse when the immersive WinMR devices (or occluded WinMR – basically Microsoft VR) devices came out last year and the basic tools used for HoloLens development, known as the HoloLens Toolkit and then the Mixed Reality Toolkit, was expanded to supported both kinds of device. Because of some issues with Unity, building for WinMR required certain versions of Unity and above while developing for HoloLens required certain versions of Unity and below. And this state went on for several months to the point that finding the correct Windows SDK paired with the right MRTK version paired with the correct Unity version became a closely kept alchemical formula passed from developer to developer.

This experience may not be the same for everyone but it left me a bit traumatized. By contrast, Magic Leap development is simply a pleasure. I can build and see the results very quickly in my device. I can wear the device for hours at a time. I typically only stop development when the ML battery runs down and I have to let it recharge. I don’t have a Magic Leap Hub, which would allow me to charge while I dev, but I intend to get one.

The Magic Leap toolkit is still not quite as capable as the open source Mixed Reality Toolkit managed by Stephen Hodgson and others.

The Magic Leap also has a simulator rather than an emulator for developing without a device. This actually makes sense since the Hololens emulator runs the HoloLens OS in a virtual machine, which might be tricky given the much larger specs of the Magic Leap.

7. Interactions

The Magic Leap supports robust hand and gesture tracking as well as a 6DOF controller. The DOF in 6DOF stands for degrees of freedom. We know not only the direction the controller is pointing in (3DOF) but also its position.

I love the controller. I love it so much it made me finally admit to myself that I hate the HoloLens tap gesture. No one ever gets it right. It’s awkward. It’s uncomfortable and makes me feel like I’m performing a kung fu move.

By contrast, a controller just makes sense. The UX for MR, I believe, should always support three layers of interactions. Mixed reality UX should support hand gestures for ease of use. It should fall back to the controller for precision movements. It should finally fall back on the delta pad on the controller for accessibility.

For all of my antipathy toward the HoloLens tap, however, I have to say I miss the HoloLens bloom gesture (escape), which I keep trying to use in Magic Leap to no avail. Instead, in Magic Leap holding the controller’s Home button for three seconds is the escape gesture, which I don’t really like. It also bothers me that hand gestures aren’t supported in the core desktop (the Icon grid) – but this is still the Creator’s Edition (translation: dev edition) after all.

[Late edit thanks to SH: it should also be pointed out that the Lumin OS (the desktop layer) currently doesn’t support hand gestures, which I find baffling. For now, you can’t get past the login and other initial screens without a paired phone or a controller.]

Summing Up

So is the Magic Leap One better than the HoloLens v1? Oh yes. By leaps and bounds.

1. The development workflow is much more straight forward and pleasant.

2. The increased battery size and beefier hardware makes it possible to do things, performance wise, that the HoloLens tended to stop us from doing. Phone and tablet level experiences are doable now.

3. The Magic Leap One has a much better interaction model than the HoloLens does. How did anyone ever do MR without a controller? (Actually, everyone used an XBox controller in the end in order to get any sort of real work done, but we don’t talk about that much.)

Is it time to jump back into Mixed Reality development?

If you spent $3.2K to $5K for a HoloLens, then you owe it to yourself to spend $2,300 for a Magic Leap. It’s the device you originally wanted. The HoloLens was a brilliant device back in 2016 and really the first of its kind, but it had limitations. Many of the projects you were never able to realize in HoloLens (in the small dev community that developed around HoloLens, we all know what these are) are now doable with the improved Magic Leap specs. Additionally, your enterprise stories are much easier to sell with the controller. Instead of spending 5 minutes of your precious pitch time explaining how tap works, you can now just let your potential investors and clients go straight into the demo with a controller they basically already know how to use.

Is there a future in spatial computing?

Now there is. There was a brief pause between 2016 and the middle of 2018, but we currently have two great devices available with another shoe dropping soon. Microsoft will be coming out with a HoloLens v2 sometime in the first half of 2019 which I would predict will implement the tethered design Magic Leap is using. This will be an improvement over the current Magic Leap which in turn will be driven to improve its own tech.

Microsoft has an advantage because it started this journey back in the Kinect days and has the resources of Microsoft Research to draw on. Magic Leap has an advantage because, well, they aren’t Microsoft and don’t face the internal political problems a large tech giant does (though no doubt they have their own). More importantly, they have their own U.S.-based production lines (as well as production lines in Mexico) and are less reliant on China, which hopefully means they are capable of much quicker turn-arounds and initial SKU production.

When do we get smaller devices that wear like glasses?

I have no idea, but try to think in terms of 3, 5, 10 years. We always overestimate what can be done in 3 years but always underestimate how much things will change in 10. Somewhere in the middle, we will intersect with our MR futures.

Your comments, corrections and criticisms are welcome in the comments below. I’ll try to keep up with them and incorporate what you say into the main article as appropriate.