Warning: there is a bit of profanity. I’m warning you because profanity is obviously worse than being compared to Adolf Hitler. On the other hand, sometimes dirty words can be as funny as logical fallacies — and so can Hitler.

Category: Theater of the Absurd

Slouching Towards The Singularity

This has been a whirlwind week at Magenic. Monday night Rocky Lhotka (the ‘h’ is silent and the ‘o’ is short, by the way), a Magenic evangelist and Microsoft Regional Director, came into town and presented at the Atlanta .NET User’s Group. The developers at Magenic got a chance to throw questions at him before the event, and then got another chance to hear him at the User’s Group. The ANUG presentation was extremely good, first of all because Rocky didn’t use his PowerPoint presentation as a crutch, but rather had a complete presentation in his head for which the MPP slides — and later the code snippets (see Coding is not a Spectator Sport) — were merely illustrative. Second, in talking about how to put together an N-Layer application, he gave a great ten-year history of application development, and the ways in which we continue to subscribe to architectures that were intended to solve yesterday’s problems. He spoke about N-Layer applications instead of N-Tier (even though it doesn’t roll off the tongue nearly as well) in order to emphasize the point that, unlike in the COM+ days, these do not always mean the same thing, and in fact all the gains we used to ascribe to N-Tier applications — and the reason we always tried so hard to get it onto our resumes — can in fact be accomplished with a logical, rather than a physical, decoupling of the application stack. One part of the talk was actually interactive, as we tried to remember why we used to try to implement solutions using Microsoft Transaction Server and later COM+. It was because that was the only way we used to be able to get an application to scale, since the DBMS in a client-server app could typically only serve about 30 concurrent users.
But back then, of course, we were running SQL Server 7 (after some polling, the audience agreed it was actually SQL Server 6.5 back in ’97) on a box with a Pentium 4 (after some polling, the audience concluded that it was a Pentium II) with 2 Gigs (it turned out to be 1 Gig or less) of RAM. In ten years’ time, the hardware and software for databases have improved dramatically, and so the case for an N-tier architecture (as opposed to an N-Layer architecture) in which we use two different servers in order to access data simply is not there any more. This is one example of how we continue to build applications to solve yesterday’s problems.

The reason we do this, of course, is that the technology is moving too fast to keep up with. As developers, we generally work with rules of thumb — which we then turn around and call best practices — the reasons for which are unclear to us, or simply become forgotten. Rocky is remarkable in being able to recall that history — and perhaps even for thinking of it as something worth remembering — and so is able to provide an interesting perspective on our tiger ride. But of course it is only going to get worse.

This is the premise of Vernor Vinge’s concept of The Singularity. Based loosely on Moore’s Law, Vinge (pronounced Vin-Jee) proposed that our ability to predict future technologies will collapse over time, so that if we could, say, predict technological innovation ten years in the future 50 years ago, today our prescience only extends some five years into the future. We are working, then, toward some moment in which our ability to predict technological progress will be so short that it is practically nothing. We won’t be able to tell what comes next. This will turn out to be a sort of secular chiliasm in which AIs happen, nanotechnology becomes commonplace, and many of the other plot devices of science fiction (excluding the ones that turn out to be physically impossible) become realities. The Singularity is essentially progress on speed.

There was some good chatting around after the user group and I got a chance to ask Jim Wooley his opinion of LINQ to SQL vs Entity Framework vs Astoria, and he gave some eyebrow-raising answers which I can’t actually blog about because of various NDAs with Microsoft and my word to Jim that I wouldn’t breathe a word of what I’d heard (actually, I’m just trying to sound important — I actually can’t remember exactly what he told me, except that it was very interesting and came down to that old saw, ‘all politics are local’).

Tuesday was the Microsoft 2008 Launch Event, subtitled "Heroes Happen Here" (who comes up with this stuff?). I worked the Magenic kiosk, which turned out (from everything I heard) to be much more interesting than the talks. I got a chance to meet with lots of developers and found out what people are building ‘out there’. Turner Broadcasting just released a major internal app called Traffic, and is in the midst of implementing standards for WCF across the company. Matria Healthcare is looking at putting in an SOA infrastructure for their healthcare line of products. CCP – White Wolf, soon to be simply World of Darkness, apparently has the world’s largest SQL Server cluster with 120 blades servicing their Eve Online customers, and is preparing to release a new web site sometime next year for the World of Darkness line, with the possibility of using Silverlight in their storefront application. In short, lots of people are doing lots of cool things. I also finally got the chance to meet Bill Ryan at the launch, and he was as cool and as technically competent as I had imagined.

Tuesday night Rocky presented on WPF and Silverlight at the monthly Magenic tech night. As far as I know, these are Magenic-only events, which is a shame because lots of interesting and blunt questions get asked — due in some part to the free-flowing beer. Afterwards we stayed up playing Rock Band on the Xbox 360. Realizing that we didn’t particularly want to do the first set of songs, various people fired up their browsers to find the cheat codes so we could unlock the advanced songs, and we finished the night with Rocky singing Rush and Metallica. In all fairness, no one can really do Tom Sawyer justice. Rocky’s rendition of Enter Sandman, on the other hand, was uncanny.

Wednesday was a sales call with Rocky in the morning, though pretty much I felt like a third wheel and spent my time drinking the client’s coffee and listening to Rocky explain things slightly over my head, followed by technical interviews at the Magenic offices. Basically a full week, and I’m only now getting back to my Silverlight in Seven Days experiment — will I ever finish it?

Before he left, I did get a chance to ask Rocky the question I’ve always wanted to ask him. By chance I’ve run into lots of smart people over the years — in philosophy, in government, and in technology — and I always work my way up to asking them this one thing. Typically they ignore me or they change the subject. Rocky was kind enough to let me complete my question at least, so I did. I asked him if he thought I should make my retirement plans around the prospect of The Singularity. Sadly he laughed, but at least he laughed heartily, and we moved on to talking about the works of Louis L’Amour.

Christmas Tree Blues

Every family has its peculiar Christmas traditions. My family’s holiday traditions are strongly influenced by a linguistic dispute back in A.D. 1054, one consequence of which is that we celebrate Christmas on January 7th, 13 days after almost everyone else we know does so. This has its virtues and its vices. One of the vices is that we clean up on holiday shopping, since we are afforded an extra 13 days to pick up last minute presents, which gets us well into the time zone of post-holiday sales. Another is that we always wait until a period somewhere between December 23rd and December 26th to buy our Christmas tree. We typically are able to pick up our trees for a song, and last year were even able to get a tall frosty spruce without even singing.

This history of vice has finally caught up with us, for this year, as we stalked forlornly through the suburbs of Atlanta, no Christmas trees were to be found. Lacking foresight or preparation, we have found ourselves in the midst of a cut-tree shortage. And what is a belated Christmas without a cut-tree shedding in the living room?

We are now in the position of pondering the unthinkable. Should we purchase an artificial tree this year (currently fifty-percent off at Target)? The thought fills us with a certain degree of inexplicable horror. Perhaps this is owing to an uncanny wariness about the prospects of surrendering to technology, in some way. While not tree-huggers, as such, we have a fondness for natural beauty, and there are few things so beautiful as a tree pruned over a year to produce the correct aesthetic form, then cut down, transported, and eventually deposited in one’s living room where it is affectionately adorned with trinkets and lighting.

Another potential source for the uneasiness my wife and I are experiencing is an association of these ersatz arboreals with memories of our childhoods in the late 70’s and early 80’s, which are festooned with cigarette smoke, various kinds of loaf for dinner, checkered suits, polyester shirts and, of course, artificial trees. Is this the kind of life we want for our own children?

In the end, we have opted to get a three-foot, bright pink, pre-lit artificial tree. Our thinking is that this tree will not offend so greatly if it knows its place and does not put forward pretensions of being real.

The linguistic ambiguity alluded to above has led to other traditions. For instance, in Appalachia there are still people who cleave to the custom that on the midnight before January 6th, animals participate in a miracle in which they all hold concourse. Briefly granted the opportunity to speak, all creatures great and small can be heard praying quietly and, one would imagine, discussing the events of the past year. The significance of January 6th comes from the fact that the Church of England was late to adopt the Gregorian calendar, which changed the way leap years are calculated, and consequently so was America late. Thus at the time that the Appalachian Mountains were first settled by English emigrants, the discrepancy between the Gregorian calendar and the Julian calendar was about 12 days (the gap, as mentioned above, has grown to 13 days in recent years). The mystery of the talking animals revolves around a holiday that was once celebrated on January 6th, but is celebrated no more — that is, Christmas.
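For the curious, the widening gap between the two calendars can be worked out with a little arithmetic. What follows is only a sketch: the function name is made up for illustration, and it assumes a date in March or later of the given year, since the gap grows at the end of February in those century years that the Julian calendar counts as leap years and the Gregorian does not.

```python
def julian_gregorian_gap(year: int) -> int:
    """Days by which the Julian calendar trails the Gregorian.

    Hypothetical helper for illustration only. Assumes a date in March
    or later of the given year; for January and February, pass the
    previous year instead.
    """
    century = year // 100
    # Julian rule: every fourth year is a leap year.
    # Gregorian rule: century years are leap years only if divisible by 400.
    # Each century leap day the Gregorian calendar skips widens the gap by one.
    return century - century // 4 - 2


for year in (1582, 1850, 2008):
    print(year, julian_gregorian_gap(year))
```

By this reckoning the gap stood at 10 days when the Gregorian calendar was introduced in 1582, at 12 days through the nineteenth century, and at 13 days today.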

According to this site, there is a similar tradition in Italy itself. On the day of the Epiphany, which commemorates the day the three magi brought gifts to the baby Jesus, the animals speak.

Italians believe that animals can talk on the night of Epiphany so owners feed them well. Fountains and rivers in Calabria run with olive oil and wine and everything turns briefly into something to eat: the walls into ricotta, the bedposts into sausages, and the sheets into lasagna.

The Epiphany is celebrated in Rome on January 6th of the Gregorian calendar. It is possible, however, that even in Italy, older traditions have persisted under a different guise, and that the traditions of Old Christmas (as it is called in Appalachia) have simply refused to migrate 12 days back into December, and are now celebrated under a new name. Such is the way that linguistic ambiguities give rise to ambiguities in custom, and ambiguities in custom give rise to anxiety over what to display in one’s living room, and when.

Taliesin’s Riddle

As translated by Lady Charlotte Guest, excerpted from Robert Graves’s The White Goddess: A Historical Grammar of Poetic Myth:

Discover what it is:

The strong creature from before the Flood

Without flesh, without bone,

Without vein, without blood,

Without head, without feet …

In field, in forest…

Without hand, without foot.

It is also as wide

As the surface of the earth,

And it was not born,

Nor was it seen …

[Answer: Ventus]

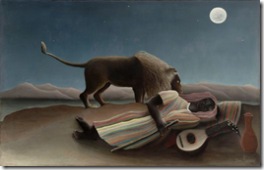

Gypsy Groove

Why are gypsies commonly believed to be able to foretell the future?

This is the question that sets Pierce Moffett, the hero of John Crowley’s novel Aegypt, in search of the true origin of the Roma, and with it the true meaning of history. In his novels about Moffett, Crowley unravels a world in which history not only can be broken into different periods (The Dark Ages, The Renaissance, The Enlightenment, et al.), but in which the rules by which the world works shift and rupture between these eras.

And there are in fact people who believe such things. A friend once told me about a history professor of his who lectured on the prevalence of sightings of angels and spirits in Medieval and Renaissance literature, and questioned how so many people could believe such things. His radical conclusion, which he believed irrefutable, was that such things must have once been real.

John Crowley himself is a skeptic on such matters, as people often are who play too much at pretending to believe. At a certain point, one must choose either to cross the line into a possible madness, or draw back into a more definite epoche — a suspension of belief.

We, who have not stepped so deeply into these mysteries, are in a safer position to toy with possibilities, engaging in what Coleridge called the suspension of disbelief. It is what allows us to understand and even share in Don Quixote’s delusions, without thereby succumbing to them ourselves.

When I lived in Prague a few years back, there were stories about a gypsy bar on the edge of the city where one could meet gypsy princes and sip absinthe, a concoction that is illegal in much of the developed world. I never went of course, partly from a lack of courage, and partly out of a fear that confronting the thing itself would dispel for me the image I had already formed in my imagination of that magic place.

Over the years, I’ve privately enjoyed several fantasies about that bar. In some of these, I drink the absinthe and am immediately transformed, through a sudden revelation, into a passionate artist who spends the rest of his life trying to paint something he cannot quite capture. In others, I am accosted by a gypsy prince, and, defeating him in a bloody duel with knives, I leave with his gypsy bride. In most, I simply am kidnapped by the gypsies while in the grips of an absinthe-induced haze, and eventually learn their ways and join their tribe, traveling across Europe stealing from the rich and conning the gullible.

Such is my secret life, for what it is worth. Inspired by this secret dream to be a gypsy, I have been listening to an album called Gypsy Groove, groove being the postpositional modifier that does in the 21st century what hooked-on did for us in the 20th. The copywriting claims it is “a collection of Balkan beats, gypsy jams and other treats from the leaders of this vibrant music scene.” I prefer the way my son (a natural poet, I believe) describes it: “it makes my ears sing and my butt dance.” I am especially fond of the song Zsa Manca, by the Czech group !DelaDap.

According to Pierce Moffett, the gypsies are refugees from a time that no longer exists, from a country called, not Egypt, but rather its mythical homophone, Aegypt. In order to explain this strange origin, Crowley builds a fictional story on top of a true story about how scholars in the Renaissance mistakenly projected the origins of certain texts, already ancient in their own time, to a pre-history even more ancient, and a provenance somewhere in the geographical Egypt.

If you don’t already know this story (either the false one or the real one), and you have the patience for it, here is John Crowley’s explanation of both, from Love & Sleep:

It was all true: there really had once been a country of wise priests whose magic worked, encoded in the picture-language of hieroglyphics. That was what Pierce had learned in his recent researches. It did lie far in the past, though not in the past of Egypt. It had been constructed long after the actual Egypt had declined and been buried, its mouth stopped because its language could no longer be read.

And this magic Egypt really had been discovered or invented in Alexandria around the time of the Christianization of the Roman Empire, when a Greek-speaking theosophical cult had attributed some mystical writings of their own to ancient priests of an imaginary Egyptian past of temples and speaking statues, when the gods dwelt with men. And then that imagined country really had disappeared again as those writings were lost in the course of the Christian centuries that followed.

And when they were rediscovered — during the Renaissance in Italy, along with an entire lost past — scholars believed them to be really as ancient as they purported to be. And so a new Egypt, twice different from the original, had appeared: ancient source of knowledge, older than Moses, inspiring a wild syncretism of sun-worship, obelisks, pseudo-hieroglyphs, magic and semi-Christian mysticism, which may have powered that knowledge revolution called science, the same science that would eventually discredit imaginary Egypt and its magic.

And yet even when the real Egypt had come to light again, the tombs broken open and the language read, the other country had persisted, though becoming only a story, a story Pierce had come upon in his boyhood and later forgot, the country he had rediscovered in the City in the days of the great Parade, when he had set out to learn the hidden history of the universe: the story he was still inside of, it seemed, inescapably.

Aegypt.

Just this year, John Crowley finally published the fourth book in his Aegypt Quartet, bringing the series to a close. It is called Endless Things.

Et in Alisiia Ego

A city seems like an awfully big thing to lose, and yet this occurs from time to time. Some people search for cities that simply do not exist, like Shangri-la and El Dorado. Some lost cities are transformed through legend and art into something else, so that the historical location is something different from the place we long to see. Such is the case with places like Xanadu and Arcadia. Camelot and Atlantis, on the other hand, fall somewhere in between, due to their tenuous connection to any sort of physical reality. Our main evidence that a place called Atlantis ever existed, and later fell into the sea, comes from Plato’s account in the Timaeus, yet even at the time Plato wrote this, it already had a legendary quality about it.

….[A]nd there was an island situated in front of the straits which are by you called the Pillars of Heracles; the island was larger than Libya and Asia put together, and was the way to other islands, and from these you might pass to the whole of the opposite continent which surrounded the true ocean; for this sea which is within the Straits of Heracles is only a harbour, having a narrow entrance, but that other is a real sea, and the surrounding land may be most truly called a boundless continent. Now in this island of Atlantis there was a great and wonderful empire which had rule over the whole island and several others, and over parts of the continent, and, furthermore, the men of Atlantis had subjected the parts of Libya within the columns of Heracles as far as Egypt, and of Europe as far as Tyrrhenia … But afterwards there occurred violent earthquakes and floods; and in a single day and night of misfortune … the island of Atlantis … disappeared in the depths of the sea.

— Timaeus

Camelot and Avalon, for reasons I don’t particularly understand, are alternately identified with Glastonbury, though there are also nay-sayers, of course. Then there are cities like Troy, Carthage and Petra, which may have been legend but which we now know to have been real, if only because we have rediscovered them. The locations of these cities became forgotten over time because of wars and mass migrations, sand storms and decay. They were lost, as it were, through carelessness.

But is it possible to lose a city on purpose? The lost city of Alesia was the site of Vercingetorix’s defeat at the hands of Julius Caesar, which marked the end of the Gallic Wars. The failure of the Roman Senate to grant Caesar a triumph to honor his victory led to his decision to initiate the Roman Civil War, leading in turn to the end of the Republic and his own reign of power, which only later came to an abrupt end when he was assassinated by, among others, Brutus, his friend and one of his lieutenants at the Battle of Alesia. Having achieved mastery of Rome in 46 BCE, Caesar finally was able to throw himself the triumph he wanted, which culminated with Vercingetorix, the legendary folk hero of the French nation, the symbol of defiance against one’s oppressors and an inspiration to freedom fighters everywhere, being strangled.

Meanwhile, back in Gaul, Alesia was forgotten, and eventually became a lost city. It is as if the trauma of such a defeat, in which all the major Gallic tribes were defeated at one blow and brought to their knees, incited the Gauls to erase their past and make the site of their humiliation as if it had never been. Ironically, when I went to the Internet Classics Archive to find Caesar’s description of this lost city, I found the chapters which cover Caesar’s siege of Alesia to be completely missing. The online text ends Book Seven of Julius Caesar’s Commentaries on the Gallic and Civil Wars just before the action commences, and begins Book Eight just after the Gauls are subdued, while the intervening thirty chapters appear to have simply disappeared into the virtual ether.

In the 19th century, the French government commissioned archaeologists to rediscover Alesia, and they eventually selected a site near Dijon, today called Alise-Sainte-Reine, as the likely location. The only problem with the site is that it does not match Caesar’s description of Alesia, and Caesar’s writings about Alesia are the main source for everything we know about the city and the battle.

I have rooted around in my basement in order to dig up an unredacted copy of Caesar’s Commentaries, containing everything we remember about the lost city of Alesia:

The town itself was situated on the top of a hill, in a very lofty position, so that it did not appear likely to be taken, except by a regular siege. Two rivers, on two different sides, washed the foot of the hill. Before the town lay a plain of about three miles in length; on every other side hills at a moderate distance, and of an equal degree of height, surrounded the town. The army of the Gauls had filled all the space under the wall, comprising a part of the hill which looked to the rising sun, and had drawn in front a trench and a stone wall six feet high. The circuit of that fortification, which was commenced by the Romans, comprised eleven miles. The camp was pitched in a strong position, and twenty-three redoubts were raised in it, in which sentinels were placed by day, lest any sally should be made suddenly; and by night the same were occupied by watches and strong guards.

— Commentaries, Book VII, Chapter 69

Never Mark Antony

“The past is a foreign country,” as Leslie Poles Hartley pointed out, but they don’t always do things differently there. It is a peculiar feeling, especially to one who views history through the dual prisms of Heidegger and Foucault, to find the distant past thoroughly familiar. Such is my experience in reading the seventeenth-century poet John Cleveland’s Mark Antony, in which the refrain is both trite and profound, and perhaps only matched in this characteristic by Eliot’s refrain from The Love Song of J. Alfred Prufrock, as well as a few of the better tracks off of Bob Dylan’s Desire.

Whenas the nightingale chanted her verses

And the wild forester couch’d on the ground,

Venus invited me in the evening whispers

Unto a fragrant field with roses crown’d,

Where she before had sent

My wishes’ complement;

Unto my heart’s content

Play’d with me on the green.

Never Mark Antony

Dallied more wantonly

With the fair Egyptian Queen.

First on her cherry cheeks I mine eyes feasted,

Thence fear of surfeiting made me retire;

Next on her warmer lips, which, when I tasted,

My duller spirits made me active as fire.

Then we began to dart,

Each at another’s heart,

Arrows that knew no smart,

Sweet lips and smiles between.

Never Mark Antony

Dallied more wantonly

With the fair Egyptian Queen.

Wanting a glass to plait her amber tresses,

Which like a bracelet rich decked mine arm,

Gaudier than Juno wears whenas she graces

Jove with embraces more stately than warm,

Then did she peep in mine

Eyes’ humor crystalline;

I in her eyes was seen

As if we one had been.

Never Mark Antony

Dallied more wantonly

With the fair Egyptian Queen.

Mystical grammar of amorous glances;

Feeling of pulses, the physic of love;

Rhetorical courtings and musical dances;

Numbering of kisses arithmetic prove;

Eyes like astronomy;

Straight-limb’d geometry;

In her arts’ ingeny

Our wits were sharp and keen.

Never Mark Antony

Dallied more wantonly

With the fair Egyptian Queen.

History Blinks

Concerning history, Pascal wrote “Le nez de Cléopâtre: s’il eût été plus court, toute la face de la terre aurait changé.” Numismatists have recently begun to challenge this precept, however, with the discovery of less flattering profiles of the Egyptian queen. Earlier this year, academics at the University of Newcastle announced that by studying an ancient denarius, they arrived at the conclusion that the Queen of the Nile was rather thin-lipped and hook-nosed. Looking at pictures of the denarius in question, however, one cannot help but feel that perhaps the coin itself has undergone a bit of distortion over the years.

Given that history distorts, it seems peculiar that we would place so much faith in the clarity of twenty-twenty hindsight. Perhaps this is intended as a contrast with the propensity to error that befalls us when we attempt proclamations of foresight. Yet even the clarity of hindsight regarding recent events is often, in turn, contrasted with the objectivity achieved when we put a few hundred years between ourselves and the events we wish to put under the investigative eye. Is there an appropriate period of time after which we can say that clarity has been achieved, shortly before that counter-current of historical distortion takes over and befuddles the mind, like the last beer too many at the end of a long night?

Looking back is often a reflexive act that allows us to regret, and thus put away, our past choices. Usually, as Tolstoy opines in his excursus to War and Peace, distance provides a viewpoint that demonstrates the insignificance of individual actions, and the illusory nature of choice. It is only in the moment that Napoleon appears to guide his armies over the battlefield to certain victory. With the cool eye of recollection, he is seen to be a man merely standing amid the smoke of battle giving instructions that may or may not reach their destinations, while the battle itself is simply the aggregation of tens of thousands of individual struggles.

And yet, in the cross-currents of history looking forward and historians looking backward, one occasionally finds eddies in which the hand of history casts our collective fates with only a handful of lots. Such an eddy occurred in late 2000, and, in retrospect, it changed the face of the world. With an oracular — and possibly slightly tipsy — pen, the late Auberon Waugh was there to capture the moment for posterity:

Many Europeans may find it rather hurtful that the United States has lost all interest in Europe, as we are constantly reminded nowadays, but I think it should be said that by no means all Americans have ever been much interested in us. Only the more sophisticated or better educated were aware of the older culture from which so many of their brave ideas about democracy derived.

Perhaps the real significance of the new development is that Americans of the better class have been driven out of the key position they once held, as happened in this country after the war, leaving decisions to be made by the more or less uneducated. We owe both classes of American an enormous debt of gratitude for having saved us from the evils of Nazism and socialism, and we should never forget that. It is no disgrace that George W. Bush has never been to Europe; 50 per cent of Americans have never been abroad. They have everything they need in their own country, but their ignorance of history seems insurmountable.

Everything will be decided in Florida, but it is too late to lecture the inhabitants about the great events of world history which brought them to their present position in world affairs. Florida is a strange and dangerous place to be. It has killer toads and killer alligators. An article in the Washington Post points out that it is also the state where one is most likely to be killed by lightning. Most recently a man in south central Florida was convicted of animal abuse for killing his dog because he thought it was gay. The state carried out a long love affair with the electric chair which it stopped only recently, and somewhat reluctantly, in the face of bad publicity when people’s heads started bursting into flames.

George W. Bush’s considerable experience of the death penalty in Texas may help him here, but I feel we should leave the Americans to make up their own minds on the point. If we had a choice in the matter, I would like to think we would all choose the most venerable candidate, Senator Strom Thurmond (or Thurman if you follow caption writers in The Times) who is 97 years old. If the other candidates cannot reach a decision by Inauguration Day on January 20, he will swear the oath himself. These young people may have many interesting features, of course, and Al Gore’s hairstyle might give us something to think about, but one wearies of them after a while.

Surrendering to Technology

My wife pulled into the garage yesterday after a shopping trip and called me out to her car to catch the tail end of a vignette on NPR about the Theater of Memory tradition Frances Yates rediscovered in the 60’s — a subject my wife knows has been a particular interest of mine since graduate school. The radio essayist was discussing his attempt to create his own memory theater by forming the image of a series of rooms in his mind and placing strange mnemonic creatures representing different things he wanted to remember in each of the corners. Over time, however, he finally came to the conclusion that there was nothing in his memory theater that he couldn’t find on the Internet and, even worse, his memory theater had no search button. Eventually he gave up on the Renaissance theater of memory tradition and replaced it with Google.

I haven’t read Yates’s The Art of Memory for a long time, but it seemed to me that the guy on the radio had gotten it wrong, somehow. While the art of memory began as a set of techniques allowing an orator to memorize topics about which he planned to speak, often for hours, over time it became something else. The novice rhetorician would begin by spending a few years memorizing every nook and cranny of some building until he was able to recall every aspect of the rooms simply by closing his eyes. Next he would spend several more years learning the techniques to build mnemonic images which he would then place in different stations of his memory theater in preparation for an oration. The rule of thumb was that the most memorable images were also the most outrageous and monstrous. A notable example originating in the Latin mnemonic textbook Ad Herennium is a ram’s testicles used as a placeholder for a lawsuit, since witnesses must testify in court, and testify sounds like testicles.

As a mere technique, the importance of the theater of memory waned with the appearance of cheap paper as a new memory technology. Instead of working all those years to make the mind powerful enough to remember a multitude of topics, topics can now be written down on paper and recalled as we like. The final demise of the theater of memory is no doubt realized in the news announcer who reads off a teleprompter, being fed words to say as if they were being drawn from his own memory. This is of course an illusion, and the announcer is merely a host for the words that flow through him.

A variation on the theater of memory not obviated by paper began to be formulated in the Renaissance in the works of men like Marsilio Ficino, Giulio Camillo, Giordano Bruno, Raymond Lull, and Peter Ramus. Through them, the theater of memory was integrated with the Hermetic tradition, and the mental theater was transformed into something more than a mere technique for remembering words and ideas. Instead, the Hermetic notion of the microcosm and macrocosm, and the sympathetic rules that could connect the two, became the basis for seeing the memory theater as a way to connect the individual with a world of cosmic and magical forces. By placing objects in the memory theater that resonate with the celestial powers, the Renaissance magus was able to call upon these forces for insight and wisdom.

Since magic is not real, even these innovations are not so interesting on their own. However, the 18th-century thinker Giambattista Vico, both a rationalist and someone steeped in the traditions of Renaissance magic, recast the theater of memory one more time. For Vico, the memory theater was not a repository for magical artifacts, but rather something that is formed in each of us through acculturation; it contains a knowledge of the cultural institutions, such as property rights, marriage, and burial (the images within our memory theaters), that are universal and make culture possible. Acculturation puts these images in our minds and makes it possible for people to live together. As elements of our individual memory theaters, these civilizing institutions are taken to be objects in the world, when in actuality they are images buried so deeply in our memories that they exert a remarkable influence over our behavior.

Some vestige of this notion of cultural artifacts can be found in Richard Dawkins’s hypothesis about memes as units of culture. Dawkins suggests that our thoughts are made up, at least in part, of memes that influence our behavior in irrational but inexorable ways. On analogy with his concept of genes as selfish replicators, he conceives of memes as things seeking to replicate themselves based on rules that are not necessarily either evident or rational. His examples include, at the trivial end, songs that we can’t get out of our heads and, at the profound end, the concept of God. For Dawkins, memes are not part of the hardwiring of the brain, but instead act like computer viruses attempting to run themselves on top of the brain’s hardware.

One interesting aspect of Dawkins’s interpretation of the spread of culture is that it also offers an explanation for the development of subcultures and fads. Subcultures can be understood as communities that physically limit the available vectors for the spread of memes to their own members, while fads can be explained away as short-lived viruses that are vital for a while but eventually waste their energies and disappear. The increasing prevalence of visual media and the Internet, in turn, increases the number of vectors for the replication of memes, just as increased air-travel improves the ability of real diseases to spread across the world.

Dawkins describes the replication of memetic viruses in impersonal terms. The purpose of these viruses is not to advance culture in any way, but rather simply to perpetuate themselves. The cultural artifacts spread by these viruses are not guaranteed to improve us, any more than Darwinian evolution offers to make us better morally, culturally or intellectually. Even to think in these terms is a misunderstanding of the underlying reality. Memes do not survive because we judge them to be valuable. Rather, we deceive ourselves into valuing them because they survive.

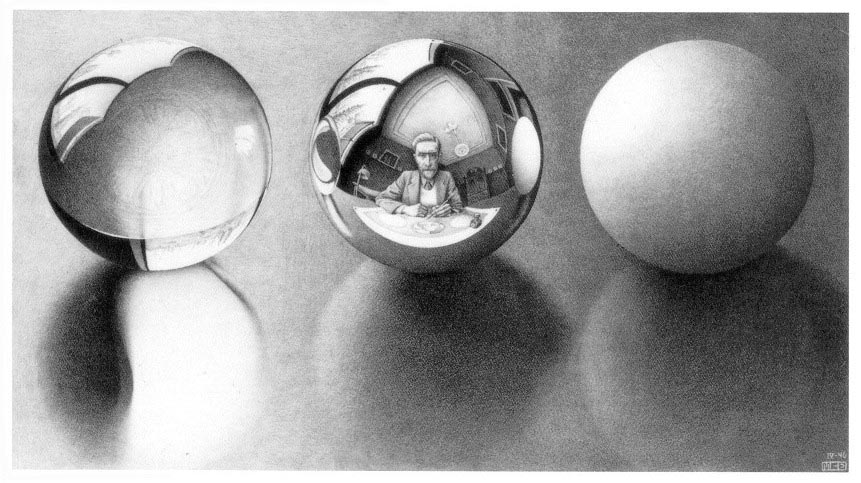

How different this is from the Renaissance conception of the memory theater, for which the theater existed to serve man, instead of man serving simply to host the theater. Ioan Couliano, in the 80’s, attempted to disentangle Renaissance philosophy from its magical trappings to show that at its root the Renaissance manipulation of images was a proto-psychology. The goal of the Hermeticist was to cultivate and order images in order to improve both mind and spirit. Properly arranged, these images would help him to see the world more clearly, and allow him to live in it more deeply.

For after all what are we but the sum of our memories? A technique for forming and organizing these memories — to actually take control of our memories instead of simply allowing them to influence us willy-nilly — such as the Renaissance Hermeticists tried to formulate could still be of great use to us today. Is it so preposterous that by reading literature instead of trash, by controlling the images and memories that we allow to pour into us, we can actually structure what sort of persons we are and will become?

These were the ideas that initially occurred to me when I heard the end of the radio vignette while standing in the garage. I immediately went to the basement and pulled out Umberto Eco’s The Search For The Perfect Language, which has an excellent chapter in it called Kabbalism and Lullism in Modern Culture that seemed germane to the topic. As I sat down to read it, however, I noticed that Doom, the movie based on a video game, was playing on HBO, so I ended up watching that on the brand new plasma TV we bought for Christmas.

The premise of the film is that a mutagenic virus (a virus that creates mutants?) is found on an alien planet that starts altering the genes of people it infects and turns them into either supermen or monsters depending on some predisposition of the infected person’s nature. (There is even a line in the film explaining that the final ten percent of the human genome that has not been mapped is believed to be the blueprint for the human soul.) Doom ends with “The Rock” becoming infected and having to be put down before he can finish his transformation into some sort of malign creature. After that I pulled up the NPR website in order to do a search on the essayist who abandoned his memory theater for Google. My search couldn’t find him.

Giulio Camillo, father of the Personal Computer

I am not the first to suggest it, but I will add my voice to those that want to claim that Giulio Camillo built the precursor of the modern personal computer in the 16th century. Claiming that anyone invented anything is always a precarious venture, and it can be instructive to question the motives of such attempts. For instance, trying to determine whether Newton or Leibniz invented calculus is a simple question of who most deserves credit for this remarkable achievement.

Sometimes the question of firsts is intended to reveal something that we did not know before, such as Harold Bloom’s suggestion that Shakespeare invented the idea of personality as we know it. In making the claim, Bloom at the same time makes us aware of the possibility that personality is not in fact something innate, but something created. Edmund Husserl turns this notion on its head a bit with his reference in his writings to the Thales of Geometry. Geometry, unlike the notion of personality, cannot be so easily reduced to an invention, since it is eidetic in nature. It is always true, whether anyone understands geometry or not. And so there is a certain irony in holding Thales to be the originator of Geometry since Geometry is a science that was not and could not have been invented as such. Similarly, each of us, when we discover the truths of geometry for ourselves, becomes in a way a new Thales of Geometry, having made the same observations and realizations for which Thales receives credit.

Sometimes the recognition of firstness is a way of initiating people into a secret society. Such, it struck me, was the case when I read as a teenager from Stephen J. Gould that Darwin was not the first person to discover the evolutionary process, but that it was in fact another naturalist named Alfred Russel Wallace, and suddenly a centuries-long conspiracy to steal credit from the truly deserving Wallace was revealed to me, or so it had seemed at the time.

Origins play a strange role in etymological considerations, and when we read Aristotle’s etymological ruminations, there is certainly a sense that the first meaning of a word will somehow provide the key to understanding the concepts signified by the word. There is a similar intuition at work in the discussions of ‘natural man’ to be found in the political writings of Hobbes, Locke and Rousseau. For each, the character of the natural man determines the nature of the state, and consequently how we are to understand it best. For Hobbes, famously, the life of this kind of man is rather unpleasant. For Locke, the natural man is typified by his rationality. For Rousseau, by his freedom. In each case, the character of the ‘natural man’ serves as a sort of gravitational center for understanding man and his works at any time. I have often wondered whether the discussions of the state of the natural man were intended as a scientific deduction or rather merely as a metaphor for each of these great political philosophers. I lean toward the latter opinion, in which case another way to understand firsts is not as an attempt to achieve historical accuracy, but rather an attempt to find the proper metaphor for something modern.

So who invented the computer? Was it Charles Babbage with his Difference Engine in the 19th century, or Alan Turing in the 20th with his template for the Universal Machine? Or was it Ada Lovelace, as some have suggested, the daughter of Lord Byron and collaborator with Charles Babbage who possibly did all the work while Babbage receives all the credit?

My question is a simpler one: who invented the personal computer, Steve Jobs or Giulio Camillo? I award the laurel to Camillo, who was known in his own time as the Divine Camillo because of the remarkable nature of his invention. And in doing so, of course, I am merely attempting to define what the personal computer really is: the gravitational center that is the role of the personal computer in our lives.

Giulio Camillo spent long years working on his Memory Theater, a miniaturized Vitruvian theater still big enough to walk into, basically a box, that would provide the person who stood before it the gift most prized by Renaissance thinkers: the eloquence of Cicero. The theater itself was arranged with images and figures from Greek and Roman mythology. Throughout it were Christian references intermixed with Hermetic and Kabbalistic symbols. From small boxes beneath various statues inside the theater, fragments and adaptations of Cicero’s writings could be pulled out and examined. Through the proper physical arrangement of the fantastic, the mythological, the philosophical and the occult, Camillo sought to provide a way for anyone who stepped before his theater to be able to discourse on any subject no less fluently and eloquently than Cicero himself.

Eloquence in the 16th century was understood as not only the ability of the lawyer or statesman to speak persuasively, but also the ability to evoke beautiful and accurate metaphors, the knack for delighting an audience, the ability to instruct, and mastery of diverse subjects that could be brought forth from the memory in order to enlighten one’s listeners. Already in Camillo’s time, mass production of books was coming into its own and creating a transformation of culture. Along with it, the ancient arts of memory and of eloquence (by way of analogy we might call it literacy, today), whose paragon was recognized to be Cicero, were in decline. Thus Camillo came along at the end of this long tradition of eloquence to invent a box that would capture all that was best of the old world that was quickly disappearing. He created, in effect, an artificial memory that anyone could use, simply by stepping before it, to invigorate himself with the accumulated eloquence of all previous generations.

And this is how I think of the personal computer. It is a box, occult in nature, that provides us with an artificial memory to make us better than we are, better than nature made us. Nature distributes her gifts randomly, while the personal computer corrects that inherent injustice. The only limitation to the personal computer, as I see it, is that it can only be a repository for all the knowledge humanity has already acquired. It cannot generate anything new, as such. It is a library and not a university.

Which is where the internet comes in. The personal computer, once it is attached to the world wide web, becomes invigorated by the chaos and serendipity that is the internet. Not only do we have the dangerous chaos of viruses and trojan horses, but also the positive chaos of online discussions, the putting on of masks and mixing with the online personas of others, the random following of links across the internet that ultimately leads us to make new connections between disparate concepts in ways that seem natural and inevitable.

This leads me to the final connection I want to make in my overburdened analogy. Just as the personal computer is not merely a box, but also a doorway to the internet, so Giulio Camillo’s Theater of Memory was tied to a Neoplatonic worldview in which the idols of the theater, if arranged properly and fittingly, could draw down the influences of the intelligences, divine beings known variously as the planets (Mars, Venus, etc.), the Sephiroth, or the Archangels. By standing before Camillo’s box, the spectator was immediately plugged into these forces, the consequences of which are difficult to assess. There is danger, but also much wonder, to be found on the internet.